How to Install Varnish and Test Web Server Benchmarking

Think for a moment about what happened when you browsed to the current page. You either clicked on a link that you received via a newsletter, or on the link on the homepage of Tecmint.com and then were taken to this article.

In a few words, you (or actually your browser) sent an HTTP request to the web server that hosts this site, and the server sent back an HTTP response.

As simple as this sounds, this process involves much more than that. A lot of processing had to be done server-side in order to present the nicely formatted page that you can see with all the resources in it – static and dynamic.

Without digging much deeper, you can imagine that if the web server has to respond to many requests like this simultaneously (make it only a few hundred for starters), it can either bring itself or the whole system to a crawl before long.

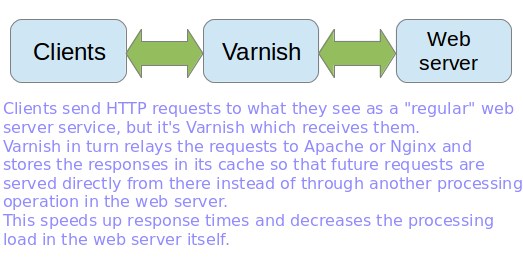

And that is where Varnish, a high-performance HTTP accelerator and reverse proxy, can save the day. In this article, I’ll explain how to install and use Varnish as a front-end to Apache or Nginx so that cached HTTP responses are served faster and without placing further load on the web server.

However, since Varnish normally stores its cache in memory instead of on disk, we will need to be careful and limit the RAM allocated for caching. We will discuss how to do this in a minute.

How Varnish Works

Installing Varnish Cache in Linux Server

This post assumes that you have installed a LAMP or LEMP server. If not, please install one of those stacks before proceeding.

The official documentation recommends installing Varnish from the developer’s own repository because they always provide the latest version. You can also choose to install the package from your distribution’s official repositories, although it may be a little outdated.

Also, please note that the project’s repositories only provide support for 64-bit systems, whereas for 32-bit machines, you’ll have to resort to your distribution’s officially maintained repositories.

In this article, we will install Varnish from the repositories officially supported by each distribution. The main reason behind this decision is to provide uniformity in the installation method and ensure automatic dependency resolution for all architectures.

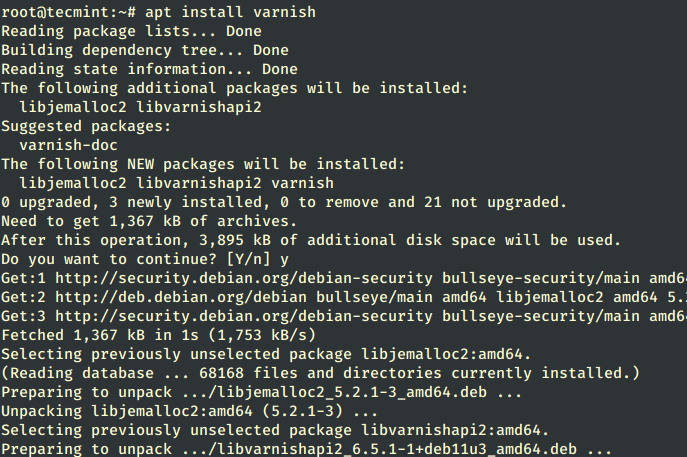

Install Varnish in Debian-based Linux

On Debian-based distributions, you can install Varnish using the apt command as shown.

# apt update
# apt install varnish

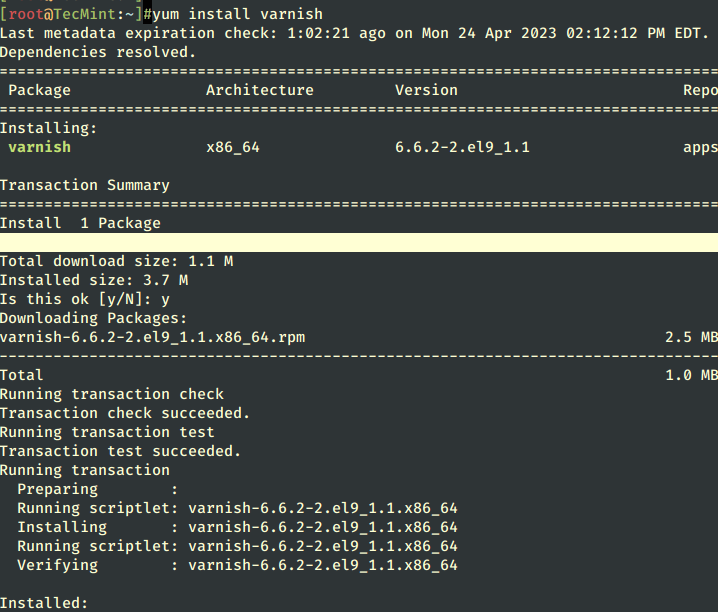

Install Varnish in RHEL-based Linux

On RHEL-based distributions such as CentOS, Rocky, and AlmaLinux, you will need to enable the EPEL repository before installing Varnish using the yum command as shown.

# yum install epel-release
# yum update
# yum install varnish

If the installation completes successfully, you will have one of the following varnish versions depending on your distribution:

# varnishd -V
varnishd (varnish-6.5.1 revision 1dae23376bb5ea7a6b8e9e4b9ed95cdc9469fb64)

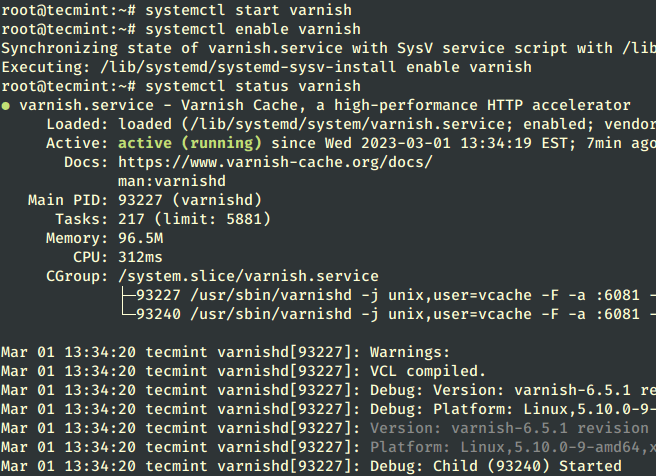

Finally, you need to start Varnish manually if the installation process didn’t do it for you and enable it to start on boot.

# systemctl start varnish
# systemctl enable varnish
# systemctl status varnish

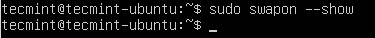

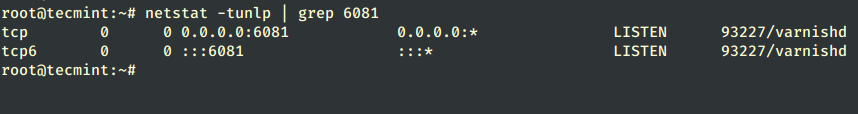

By default, the Varnish service listens on port 6081, and you can confirm this by running the following netstat command.

# netstat -tunlp | grep 6081
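On newer installations the netstat command may be missing because the net-tools package is no longer installed by default. The ss utility from iproute2 reports the same listening sockets. The following is a minimal sketch of a check built on it; the helper name listening_on is our own invention, not part of any package.

```shell
# listening_on PORT: reads `ss -tln` output on stdin and succeeds if any
# local address (4th column) ends in :PORT.
listening_on() {
  awk -v port="$1" '
    { n = split($4, parts, ":"); if (parts[n] == port) found = 1 }
    END { exit !found }'
}

# Usage on a live system:
#   ss -tln | listening_on 6081 && echo "something is listening on 6081"
```

Reading from standard input keeps the helper independent of how the socket list was produced, so it can also be tried against saved output.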

Configuring Varnish Cache in Linux

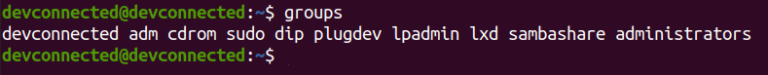

As we said earlier, Varnish stands in the middle of external clients and the web server. For that reason, and in order for the caching to become transparent to end users, we will need to:

- Change the port Varnish listens on from the default 6081 to 80.

- Change the port the web server listens on from the default 80 to 8080.

- Redirect incoming traffic from Varnish to the web server. Fortunately, Varnish does this automatically once we have completed the first two steps.
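The third step needs no extra work because Varnish forwards cache misses to the backend declared in /etc/varnish/default.vcl, and the packaged file usually already points at 127.0.0.1 on port 8080. The sketch below embeds an assumed excerpt of that file (your copy may differ) and a hypothetical helper, backend_port, that reads the port back so you can confirm it matches the web server.

```shell
# Assumed excerpt of a stock /etc/varnish/default.vcl; check your own file.
sample_vcl='backend default {
    .host = "127.0.0.1";
    .port = "8080";
}'

# backend_port: print the first .port value found in VCL text on stdin.
backend_port() {
  sed -n 's/^[[:space:]]*\.port[[:space:]]*=[[:space:]]*"\([0-9][0-9]*\)".*/\1/p' | head -n 1
}

printf '%s\n' "$sample_vcl" | backend_port   # prints 8080

# Against a real install:
#   backend_port < /etc/varnish/default.vcl
```

If the printed port is not the one your web server will listen on, edit the backend block rather than the web server configuration.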

Change Varnish Port

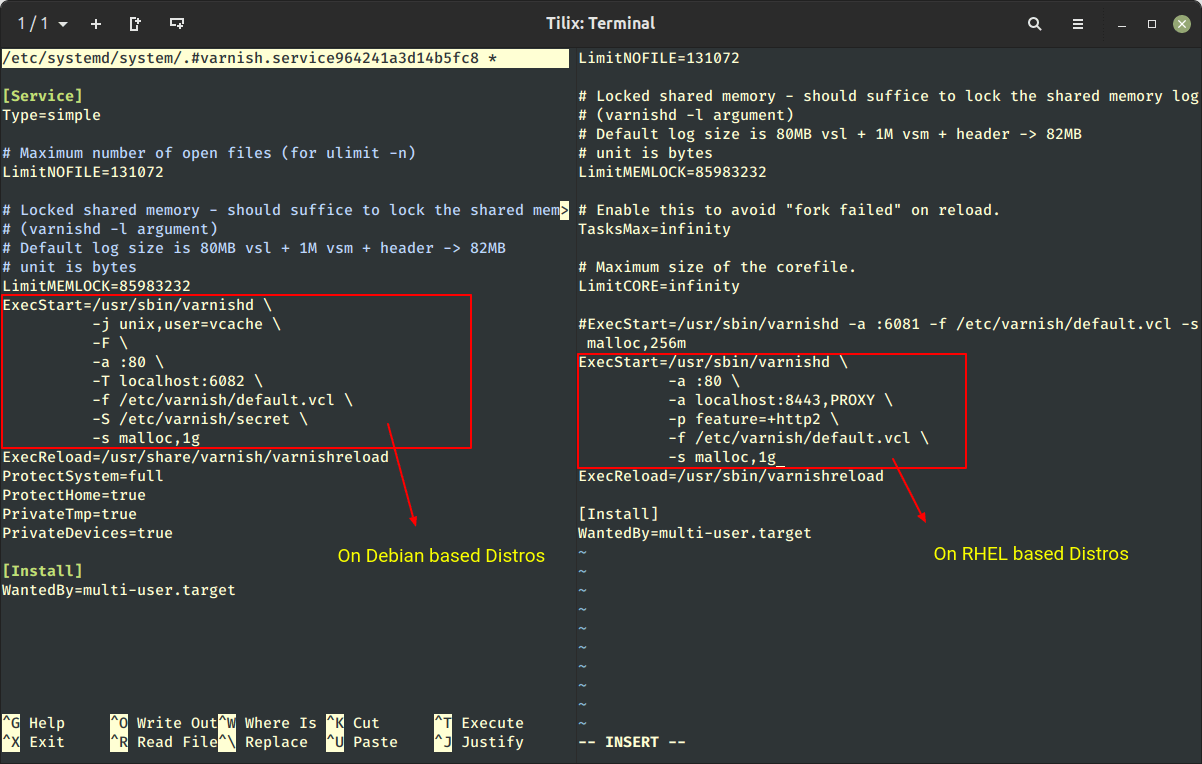

The varnishd process is controlled by systemd and has its unit file in /usr/lib/systemd/system/varnish.service, which holds the default Varnish runtime configuration.

Here, we need to change the default Varnish port from 6081 to 80 and set the cache size to 1GB. Run the following command to open the unit file in your editor.

Note: You can change the amount of memory as per your hardware resources or alternatively choose to save cached files to disk.

$ sudo systemctl edit --full varnish
OR
# systemctl edit --full varnish
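Inside the editor, the line to change is the ExecStart entry that launches varnishd: the -a option sets the listening address and -s sets the storage backend and its size. The sketch below shows the intended before and after on an assumed ExecStart line; the exact flags in your unit file vary between distributions, and the helper name retune_execstart is hypothetical.

```shell
# Assumed ExecStart line; the one in your unit file may carry more options.
sample_execstart='ExecStart=/usr/sbin/varnishd -a :6081 -f /etc/varnish/default.vcl -s malloc,256m'

# retune_execstart: listen on port 80 and cap the in-memory cache at 1 GB.
retune_execstart() {
  sed -E -e 's/-a :6081/-a :80/' \
         -e 's/-s malloc,[0-9]+[kmgKMG]?/-s malloc,1g/'
}

printf '%s\n' "$sample_execstart" | retune_execstart
# prints: ExecStart=/usr/sbin/varnishd -a :80 -f /etc/varnish/default.vcl -s malloc,1g

# Disk-backed alternative (the path is illustrative only):
#   -s file,/var/lib/varnish/cache.bin,1g
```

The sed expressions are only a way to show the two substitutions precisely; making the same edits by hand in the editor gives the same result.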

After making changes to the /etc/systemd/system/varnish.service file, you need to reload the systemd daemon by running the following command:

# systemctl daemon-reload

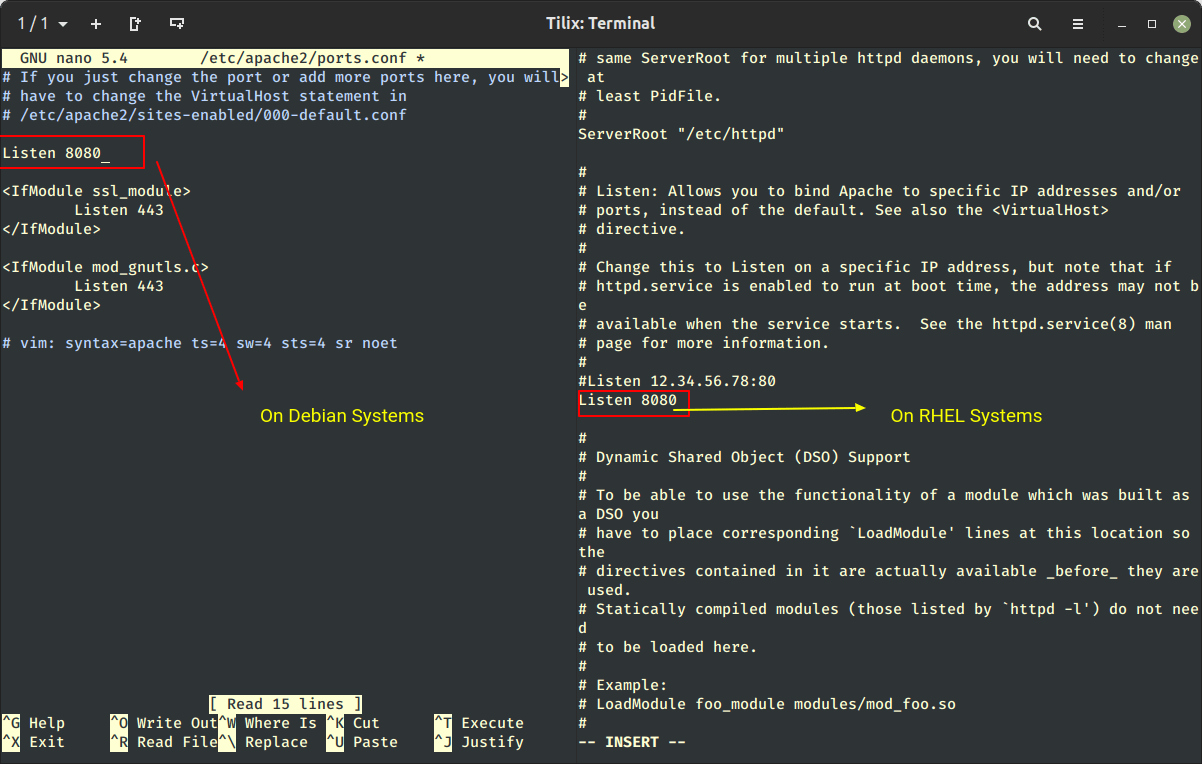

Change Apache or Nginx Port

After changing the Varnish port, you need to change your Apache or Nginx web server port from the default 80 to 8080, a commonly used alternative HTTP port.

---------- On Debian-based Systems ----------
# nano /etc/apache2/ports.conf [On Apache]
# nano /etc/nginx/sites-enabled/default [On Nginx]
---------- On RHEL-based Systems ----------
# vi /etc/httpd/conf/httpd.conf [On Apache]
# vi /etc/nginx/nginx.conf [On Nginx]
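In each of these files the directive to look for is the one that binds port 80: Listen 80 for Apache and listen 80 for Nginx. As a rough sketch of the change, the two hypothetical filters below rewrite those directives in text passed on standard input. They assume the stock single-port layout, so review the result before applying anything similar to a real configuration.

```shell
# apache_to_8080: rewrite "Listen 80" (ports.conf / httpd.conf) to "Listen 8080".
apache_to_8080() {
  sed -E 's/^([[:space:]]*Listen[[:space:]]+)80[[:space:]]*$/\18080/'
}

# nginx_to_8080: rewrite "listen 80 ..." and "listen [::]:80 ..." directives.
nginx_to_8080() {
  sed -E 's/^([[:space:]]*listen[[:space:]]+(\[::\]:)?)80([[:space:];])/\18080\3/'
}

printf 'Listen 80\n' | apache_to_8080
printf '    listen 80 default_server;\n    listen [::]:80 default_server;\n' | nginx_to_8080

# Preview against a real file without modifying it:
#   apache_to_8080 < /etc/apache2/ports.conf
```

On Debian-based systems, Apache's default virtual host is typically declared as `<VirtualHost *:80>` in /etc/apache2/sites-enabled/000-default.conf, so that port usually needs the same change.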

Once you have made the changes, don’t forget to restart Varnish and the web server.

# systemctl restart varnish
---------- On Debian-based Systems ----------
# systemctl restart apache2
# systemctl restart nginx
---------- On RHEL-based Systems ----------
# systemctl restart httpd
# systemctl restart nginx

Testing Varnish Cache in Linux

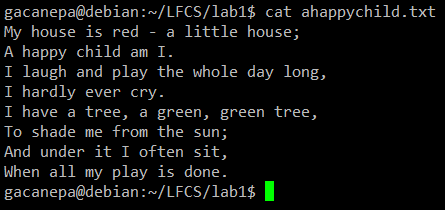

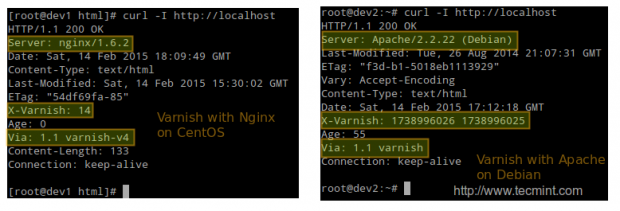

The first test consists of making an HTTP request via the curl command and verifying that it is handled by Varnish:

# curl -I http://localhost
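When a response has passed through Varnish, its headers normally include X-Varnish and a Via line that mentions varnish. When the object came from the cache, X-Varnish carries two transaction IDs instead of one. The sketch below turns those two observations into small checks; both function names are our own, and the checks assume these headers have not been stripped or renamed in VCL.

```shell
# served_by_varnish: succeeds if the headers on stdin carry Varnish's marks.
served_by_varnish() {
  grep -qiE '^(x-varnish:|via:.*varnish)'
}

# cache_hit: succeeds if X-Varnish lists two transaction IDs, which Varnish
# emits when the object was delivered from cache.
cache_hit() {
  grep -qiE '^x-varnish:[[:space:]]*[0-9]+[[:space:]]+[0-9]+'
}

# Usage on a live system (run the second command twice; the first request
# normally populates the cache):
#   curl -sI http://localhost | served_by_varnish && echo "handled by Varnish"
#   curl -sI http://localhost | cache_hit && echo "served from cache"
```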

Varnish also includes two handy tools:

- varnishlog, which displays the Varnish logs in real time.

- varnishstat, which displays Varnish cache statistics.
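Both tools can also be used non-interactively. As an illustration, varnishstat -1 prints every counter once and exits, which makes it easy to derive a cache hit ratio from the MAIN.cache_hit and MAIN.cache_miss counters. The helper below is a sketch under that assumption; hit_ratio is a name we made up.

```shell
# hit_ratio: reads `varnishstat -1` output on stdin and prints the cache hit
# percentage, or "n/a" if no lookups have been counted yet.
hit_ratio() {
  awk '
    $1 == "MAIN.cache_hit"  { hits = $2 }
    $1 == "MAIN.cache_miss" { misses = $2 }
    END {
      total = hits + misses
      if (total > 0) printf "%.1f\n", 100 * hits / total
      else print "n/a"
    }'
}

# Usage on a live system:
#   varnishstat -1 | hit_ratio
# Follow the log for a single URL only:
#   varnishlog -g request -q 'ReqURL eq "/index.html"'
```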

As a second test, in the following screencast, we will display both the logs and the statistics on a CentOS server (dev1, upper half of the screen) and on a Debian server (dev2, lower half of the screen) as HTTP requests are sent and responses received.

Test Web Server Performance Benchmark

Our third and final test will consist of benchmarking both the web server and Varnish with the ab benchmarking tool and comparing the response times and the CPU load in each case.

In this particular example, we will use the CentOS server, but you can use any distribution and obtain similar results. Watch the load average at the top and the Requests per second line in the output of ab.

With ab, we will send 100000 requests in total (indicated by -n 100000), keeping 50 of them in flight at the same time (-c 50). Varnish should return a higher number of requests per second and a much lower load average.

Important: Please remember that Varnish is listening on port 80 (the default HTTP port), while Apache is listening on port 8080. You can also take note of the amount of time required to complete each test.

# ab -c 50 -n 100000 http://localhost/index.html
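To compare the two cases, the same command can be pointed at port 80 for Varnish and at port 8080 for the web server directly. The sketch below extracts only the "Requests per second" figure from ab's report so the two runs are easy to place side by side; the helper name is hypothetical.

```shell
# requests_per_second: print the mean requests-per-second figure from an
# ab report supplied on stdin.
requests_per_second() {
  awk '/^Requests per second:/ { print $4 }'
}

# Usage on a live system (run one after the other, not in parallel):
#   ab -c 50 -n 100000 http://localhost/index.html      | requests_per_second  # via Varnish
#   ab -c 50 -n 100000 http://localhost:8080/index.html | requests_per_second  # web server directly
```

Running the tests one at a time matters, since two simultaneous benchmarks would compete for the same CPU and distort both results.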

Conclusion

In this article, we have discussed how to set up a Varnish cache in front of a web server, either Apache or Nginx. Note that we have not dug deep into the default.vcl configuration file, which allows us to customize the caching policy further.

You may now want to refer to the official documentation for further configuration examples or leave a comment using the form below.