What is Technical SEO? 11 Best Practices

Technical SEO is the process of optimizing your website to help search engines like Google find, understand, and index your pages.

Although modern search engines like Google are relatively good at discovering and understanding content, they’re far from perfect. Technical issues can easily prevent them from crawling, indexing, and showing web pages in the search results.

In this post, we’ll cover a few technical SEO best practices that anyone can implement, regardless of technical prowess.

- Ensure important content is ‘crawlable’ and ‘indexable’

- Use HTTPS

- Fix duplicate content issues

- Create a sitemap

- Use hreflang for multilingual content

- Redirect HTTP to HTTPS

- Use schema markup to win ‘rich snippets’

- Fix orphaned pages

- Make sure your pages load fast

- Use schema to improve your chance of Knowledge Graph inclusion

- Don’t nofollow internal links

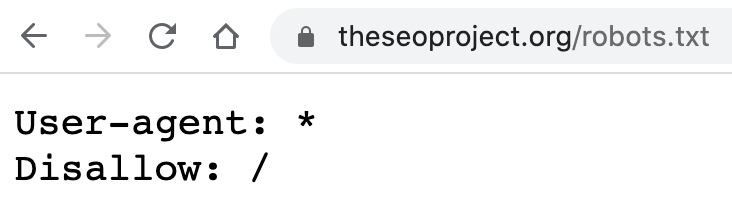

In the example above, those two simple lines of code in robots.txt block search engines from crawling every page on the website. So you can see how temperamental the robots.txt file can be, and how easy it is to make costly mistakes.

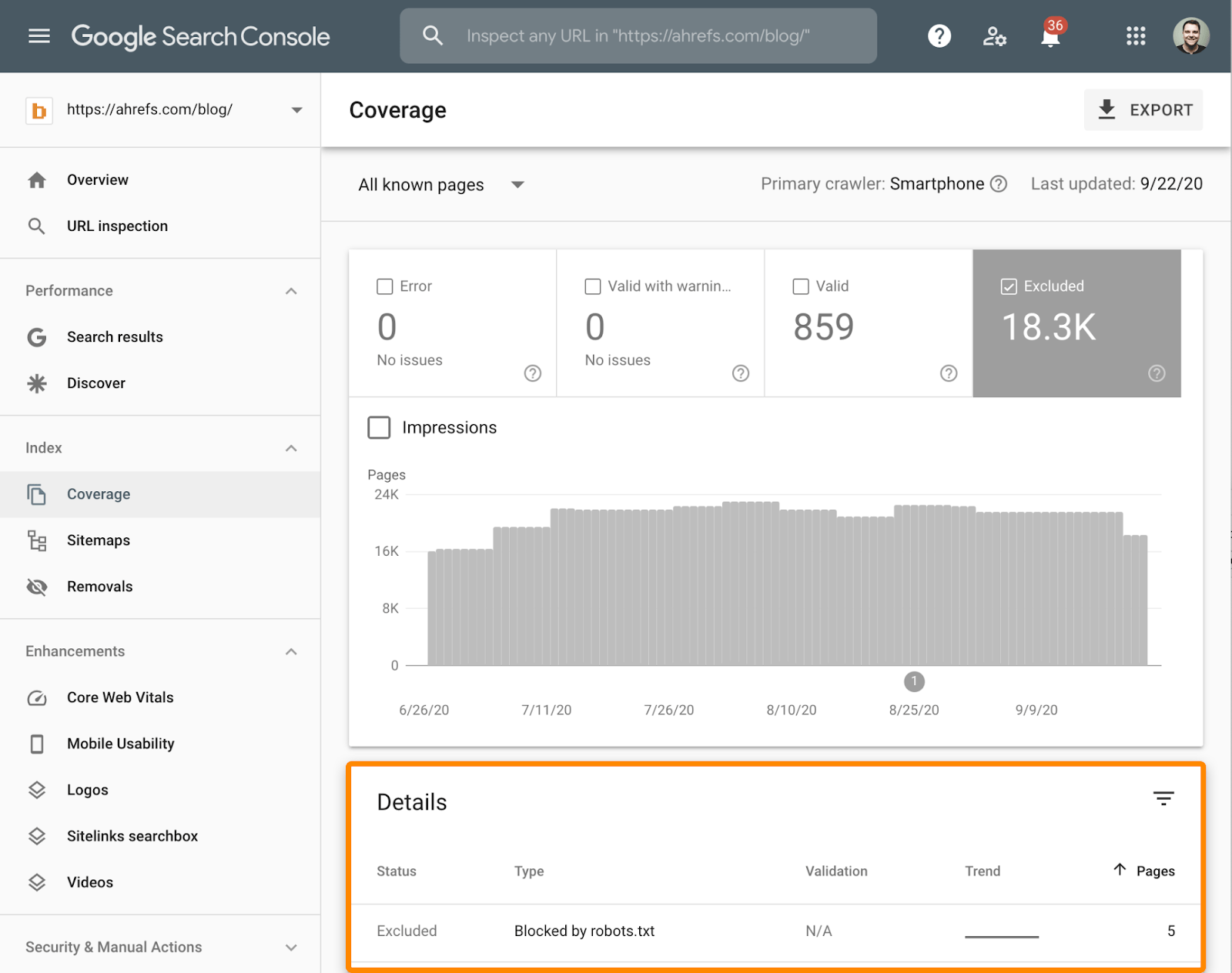

You can check which pages (if any) are blocked by robots.txt in Google Search Console. Just go to the Coverage report, toggle to view excluded URLs, then look for the “Blocked by robots.txt” error.

If there are any URLs in there that shouldn’t be blocked, you’ll need to remove or edit your robots.txt file to fix the issue.
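If you want to sanity-check a robots.txt file before you publish it, here's a minimal sketch using Python's standard `urllib.robotparser`. The `blocked_urls` helper, the sample rules, and the yourwebsite.com URLs are illustrative assumptions, and Python's parser may not interpret every rule exactly the way Googlebot does.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given robots.txt rules disallow for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical rules: two lines like these disallow every path for every crawler.
BLOCK_EVERYTHING = "User-agent: *\nDisallow: /"

# Hypothetical rules that only block one directory.
BLOCK_ADMIN_ONLY = "User-agent: *\nDisallow: /admin/"

SAMPLE_URLS = [
    "https://yourwebsite.com/",
    "https://yourwebsite.com/blog/",
    "https://yourwebsite.com/admin/settings",
]

if __name__ == "__main__":
    print(blocked_urls(BLOCK_EVERYTHING, SAMPLE_URLS))
    print(blocked_urls(BLOCK_ADMIN_ONLY, SAMPLE_URLS))
```

Running it against a list of your most important URLs is a quick way to catch an overly broad `Disallow` rule.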

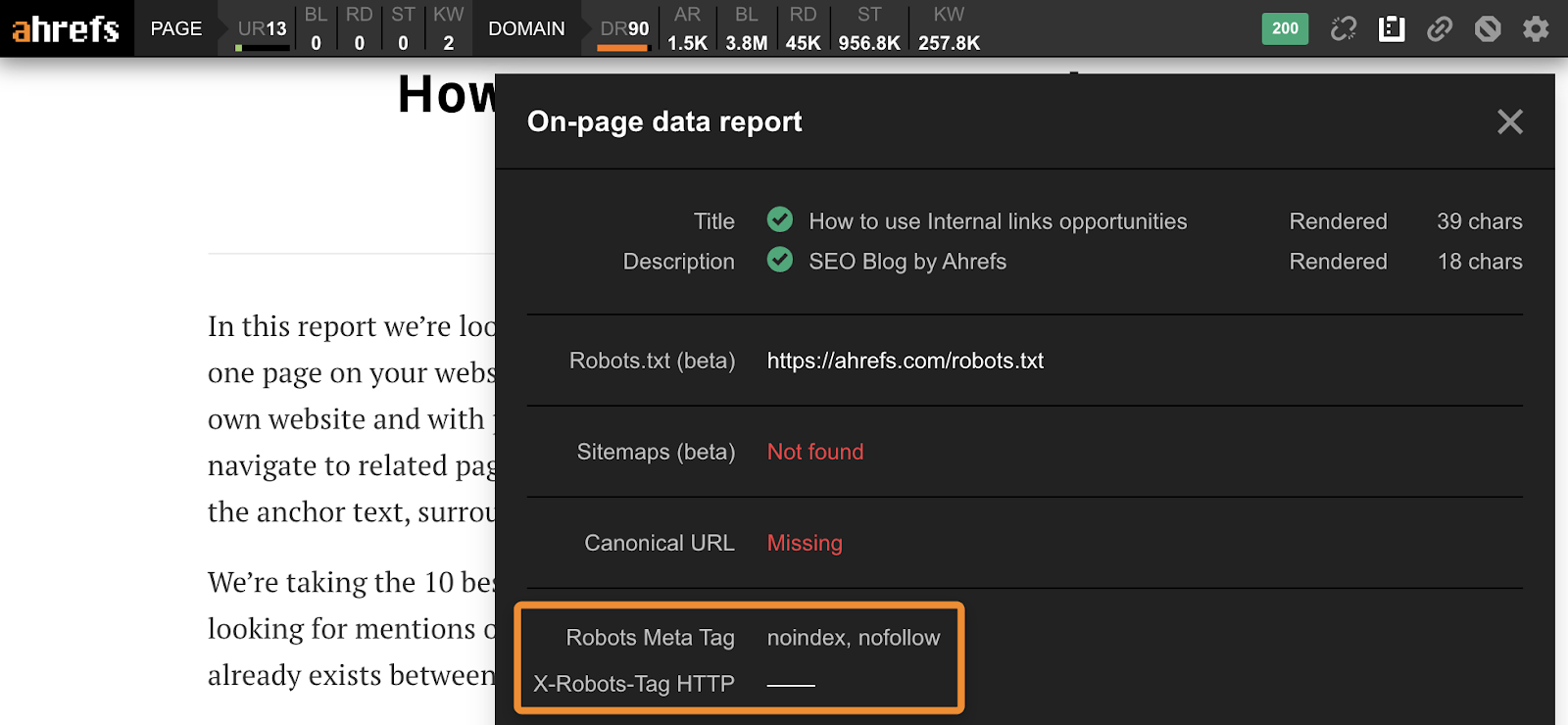

However, crawlable pages aren’t always indexable. If your webpage has a meta robots tag or an X-Robots-Tag HTTP header set to “noindex,” search engines won’t be able to index the page.

You can check if that’s the case for any webpage with the free on-page report on Ahrefs’ SEO Toolbar.

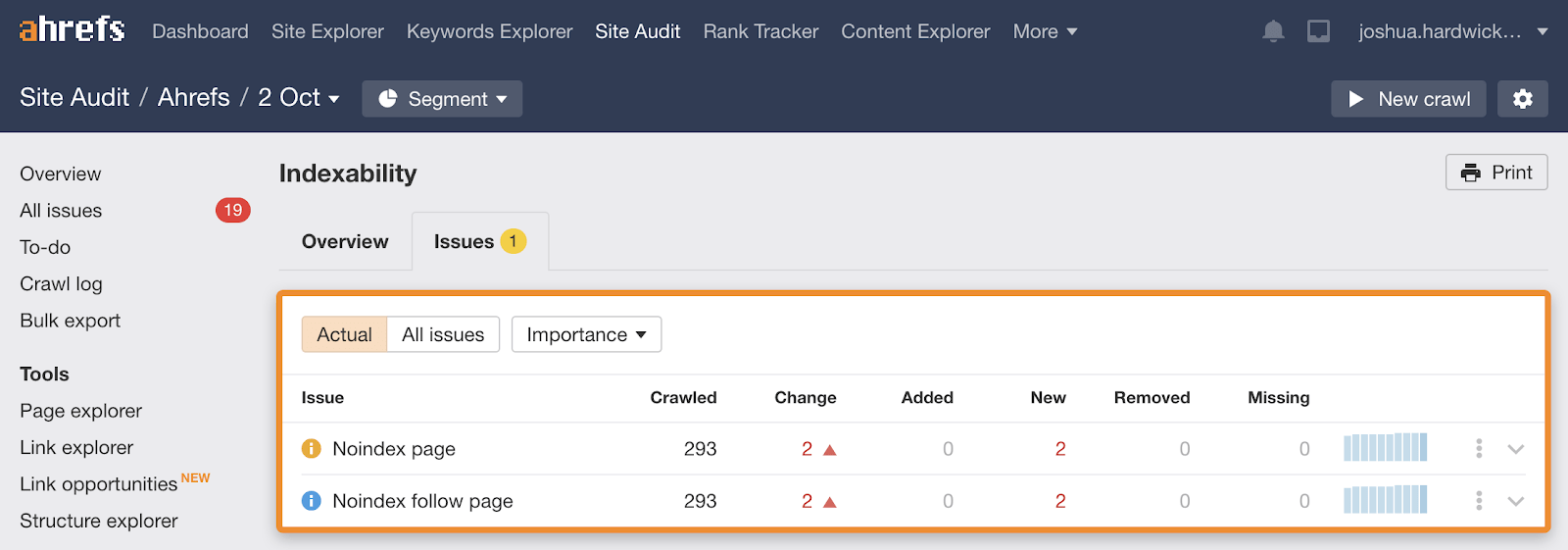

To check for rogue noindex tags across all pages, run a crawl with Site Audit in Ahrefs Webmaster Tools and check the Indexability report for “Noindex page” warnings.

Fix these by removing the ‘noindex’ meta tag or X-Robots-Tag header from any pages that should be indexed.
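To make the two noindex signals concrete, here's a rough sketch that checks a page's HTML and response headers for them. The `is_noindexed` helper is a hypothetical name, it only looks at the generic `robots` meta tag (not crawler-specific ones like `googlebot`), and you'd need to fetch the HTML and headers yourself.

```python
from html.parser import HTMLParser


class _RobotsMetaParser(HTMLParser):
    """Collects the content of every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "robots":
            self.directives.append((attr.get("content") or "").lower())


def is_noindexed(html: str, headers: dict[str, str]) -> bool:
    """True if the meta robots tag or the X-Robots-Tag header contains noindex."""
    parser = _RobotsMetaParser()
    parser.feed(html)
    header_value = ""
    for name, value in headers.items():
        if name.lower() == "x-robots-tag":  # header names are case-insensitive
            header_value = value.lower()
    return "noindex" in header_value or any("noindex" in d for d in parser.directives)


if __name__ == "__main__":
    page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
    print(is_noindexed(page, {}))
```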


Given the benefits of HTTPS for web users, it probably comes as no surprise that it’s been a ranking factor since 2014.

How do you know if your site uses HTTPS?

Go to https://www.yourwebsite.com, and check for a lock icon in the address bar.

If you see a red ‘Not secure’ warning, you’re not using HTTPS, and you need to install a TLS/SSL certificate. You can get one for free from Let’s Encrypt.

If you see a grey ‘Not secure’ warning…

… then you have a mixed content issue. That means the page itself is loading over HTTPS, but it’s loading resource files (images, CSS, etc.) over HTTP.

There are four ways to fix this issue:

- Choose a secure host for the resource (if one is available).

- Host the resource locally (if you’re legally allowed to do so).

- Exclude the resource from your site.

- Use a Content Security Policy (CSP), for example the upgrade-insecure-requests directive, so browsers request those resources over HTTPS.

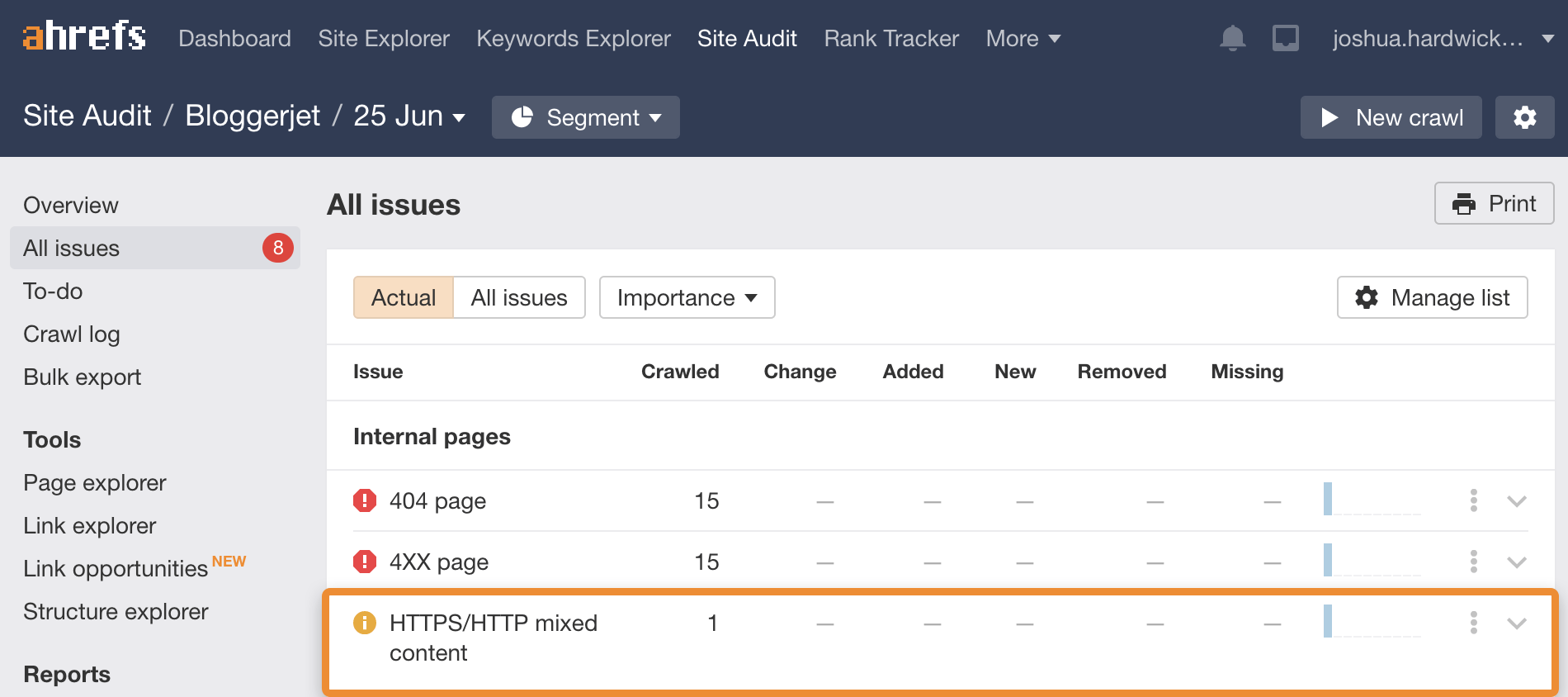

However, if you have mixed content issues on one page, it’s quite likely that other pages are affected too. To check if that’s the case, crawl your site with Ahrefs Webmaster Tools. It checks for 100+ predefined SEO issues, including HTTP/HTTPS mixed content.
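For a single page, you can also spot mixed content yourself. The sketch below scans a page's HTML for resources loaded over plain HTTP. The `find_insecure_resources` helper and the cdn.example.com URLs are made up for illustration, and it only covers a handful of common tags, so treat it as a starting point rather than a full audit.

```python
from html.parser import HTMLParser


class _InsecureResourceParser(HTMLParser):
    """Collects resource URLs that are requested over http://."""

    SRC_TAGS = {"img", "script", "iframe", "audio", "video", "source"}

    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag in self.SRC_TAGS:
            url = attr.get("src")
        elif tag == "link" and "stylesheet" in (attr.get("rel") or "").lower().split():
            url = attr.get("href")
        else:
            url = None  # plain <a> links are navigation, not mixed content
        if url and url.lower().startswith("http://"):
            self.insecure.append(url)


def find_insecure_resources(html: str) -> list[str]:
    """Return resource URLs on the page that load over HTTP instead of HTTPS."""
    parser = _InsecureResourceParser()
    parser.feed(html)
    return parser.insecure


if __name__ == "__main__":
    sample = '<img src="http://cdn.example.com/a.png"><a href="http://example.com/">link</a>'
    print(find_insecure_resources(sample))
```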

Recommended reading: What is HTTPS? Everything You Need to Know

For example, this post from Buffer appears at two locations:

https://buffer.com/library/social-media-manager-checklist

https://buffer.com/resources/social-media-manager-checklist

Despite what a lot of people think, Google doesn’t penalize sites for having duplicate content. They’ve confirmed this on multiple occasions.

But duplicate content can cause other issues, such as:

- Undesirable or unfriendly URLs in search results;

- Backlink dilution;

- Wasted crawl budget;

- Scraped or syndicated content outranking you.

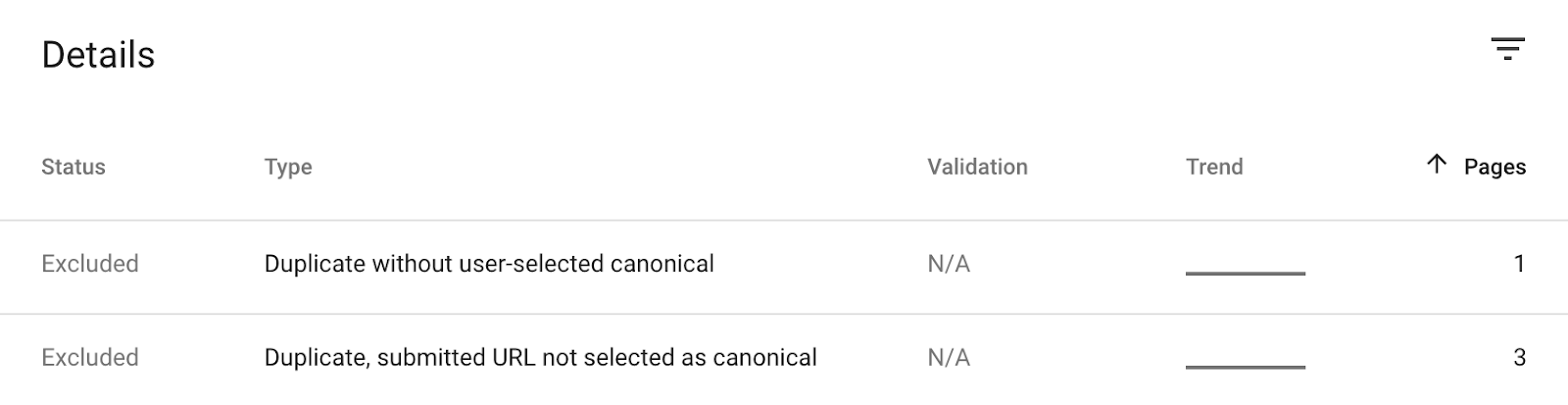

You can see pages with duplicate content issues in Google Search Console. Just go to the Coverage report, toggle to view excluded URLs, then look for issues related to duplicates.

Google explains what these issues mean and how to fix them here.

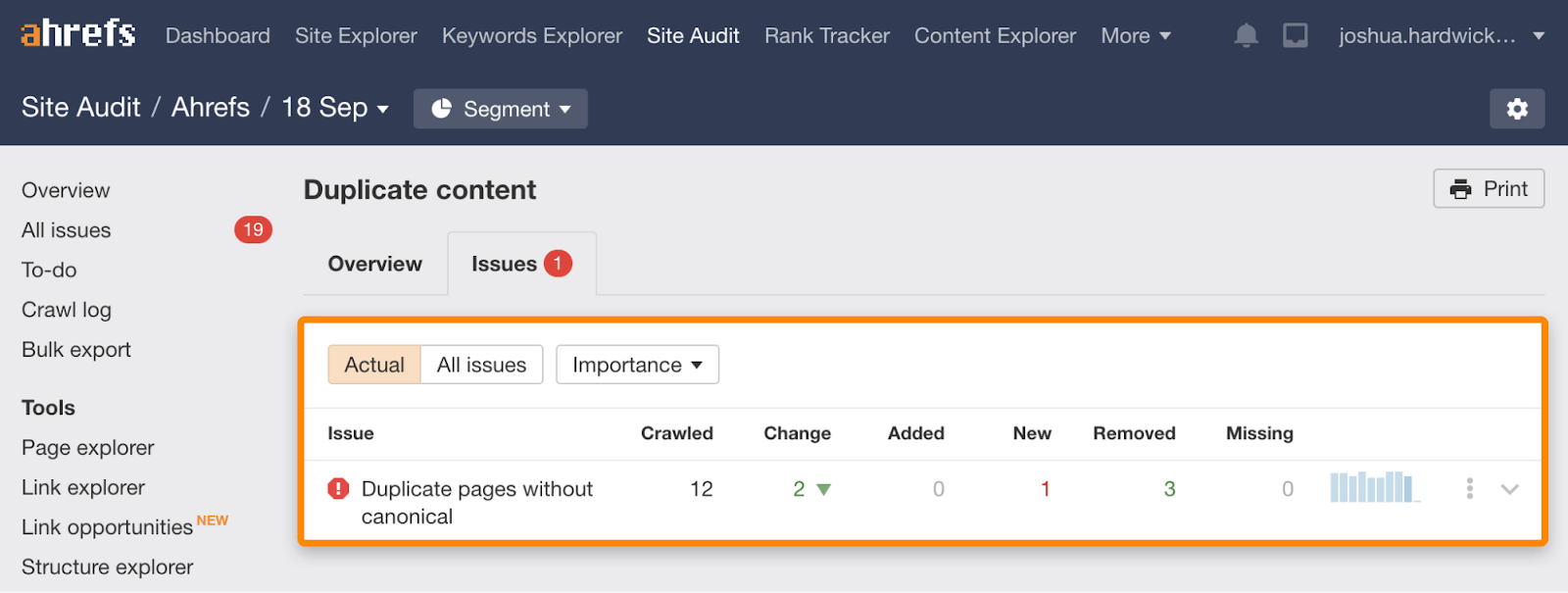

However, Search Console only tells you about URLs that Google has recognized as duplicates. There could very well be other duplicate content issues that Google hasn’t noticed. To find these, run a free crawl with Ahrefs Webmaster Tools and check the Duplicate content report.

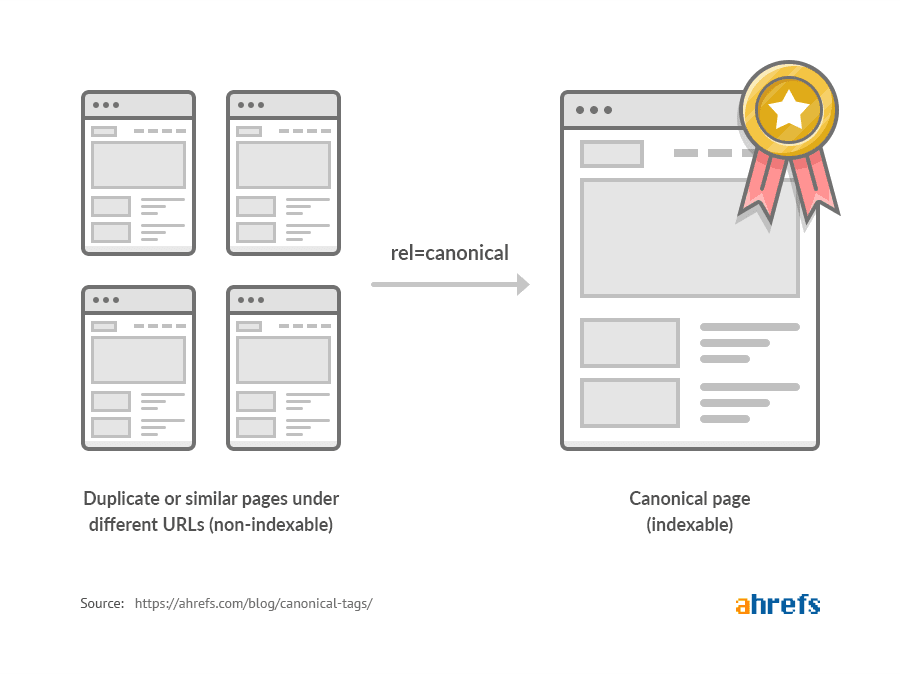

Fix the issues by choosing one URL within each group of duplicates to be the ‘canonical’ (main) version.
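Once you've picked a canonical, every page in the group should point to it with a `rel="canonical"` link tag. Here's a small sketch that checks this, assuming you already have the HTML of each duplicate. The helper names are hypothetical, and treating the /library/ URL as the canonical is just an example, not a statement about Buffer's actual setup.

```python
from html.parser import HTMLParser


class _CanonicalParser(HTMLParser):
    """Remembers the href of the first <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        rels = (attr.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rels and self.canonical is None:
            self.canonical = attr.get("href")


def canonical_of(html: str):
    """Return the canonical URL declared in the HTML, or None if there isn't one."""
    parser = _CanonicalParser()
    parser.feed(html)
    return parser.canonical


def pages_with_wrong_canonical(pages: dict[str, str], canonical_url: str) -> list[str]:
    """Return URLs in a duplicate group whose canonical tag is missing or different."""
    return [url for url, html in pages.items() if canonical_of(html) != canonical_url]


if __name__ == "__main__":
    chosen = "https://buffer.com/library/social-media-manager-checklist"
    group = {
        chosen: f'<link rel="canonical" href="{chosen}" />',
        "https://buffer.com/resources/social-media-manager-checklist": "<head></head>",
    }
    print(pages_with_wrong_canonical(group, chosen))
```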


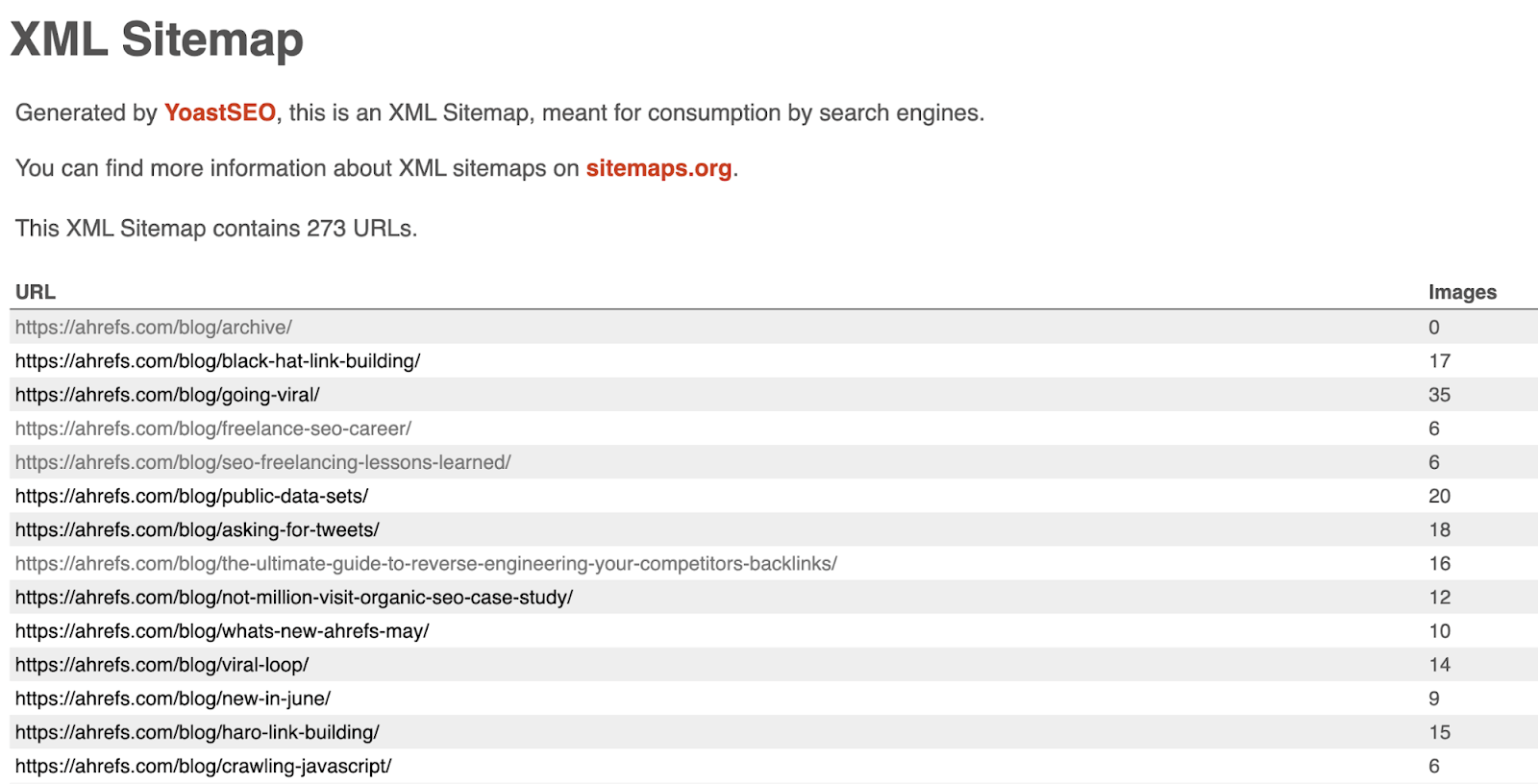

Here’s what our blog’s sitemap looks like:

Many people question the importance of sitemaps these days, as Google can usually find most of your content even without one. However, a Google representative confirmed the importance of sitemaps in 2019, stating that they’re the second most important source of URLs for Google:

“Sitemaps are the second Discovery option most relevant for Googlebot @methode #SOB2019” (Enrique Hidalgo, @EnriqueStinson, June 15, 2019)

But why is this?

One reason is that sitemaps usually contain ‘orphan’ pages. These are pages that Google can’t find through crawling because they have no internal links from crawlable pages on your website.

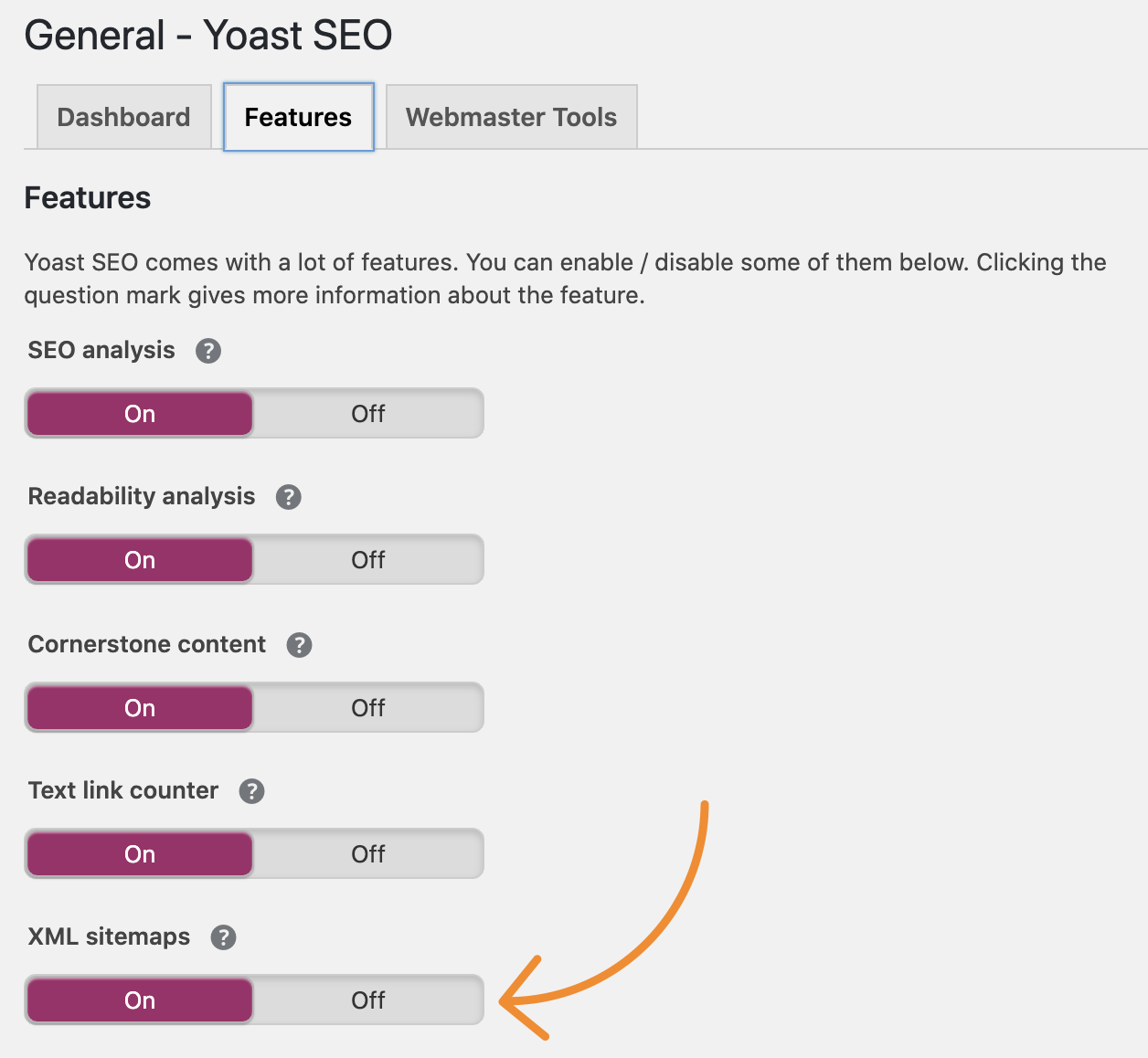

Most modern CMSs, including Wix, Squarespace, and Shopify, automatically generate a sitemap for you. If you’re using WordPress, you’ll need to create one using a popular SEO plugin like Yoast or RankMath.

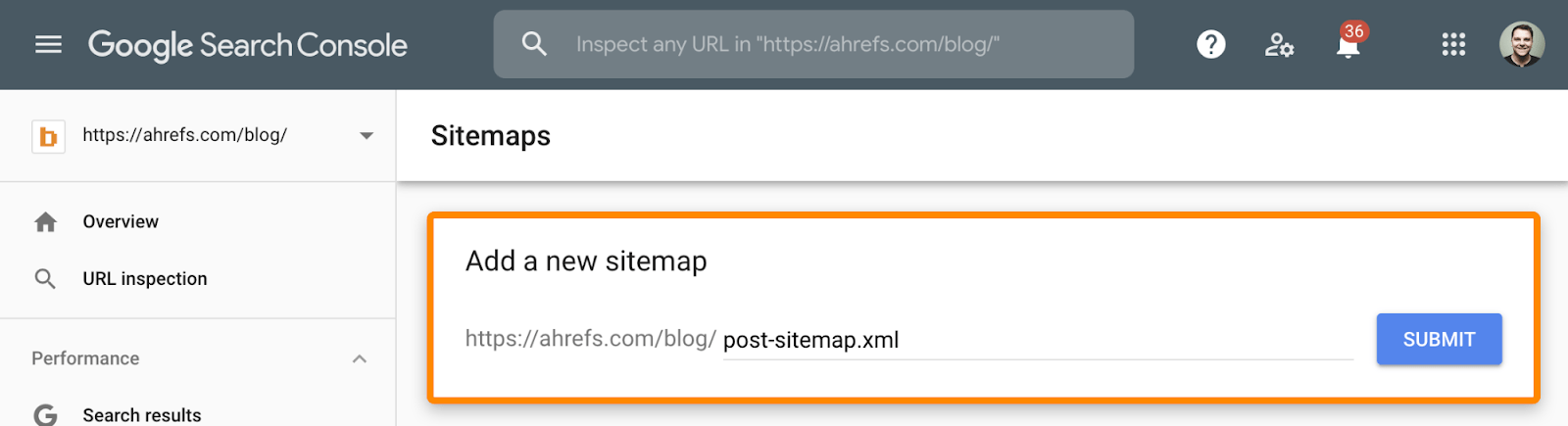

You can then submit that to Google through Search Console.

It’s worth noting that Google also sees the URLs in sitemaps as suggested canonicals. This can help combat duplicate content issues (see the previous point), but it’s still best practice to use canonical tags where possible.
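If your platform doesn't generate a sitemap for you, a basic one is easy to build by hand. The sketch below writes the minimal XML defined by the sitemaps.org protocol (a `urlset` with one `loc` per URL). The `build_sitemap` name and the yourwebsite.com URLs are placeholders, and optional fields like `lastmod` are left out to keep things short.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: list[str]) -> str:
    """Return a minimal XML sitemap listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # special characters are escaped on output
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    print(build_sitemap([
        "https://yourwebsite.com/",
        "https://yourwebsite.com/blog/",
    ]))
```

Save the output as sitemap.xml at the root of your site, then submit it in Search Console as described above.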

Recommended reading: How to Create an XML Sitemap (and Submit It to Google)

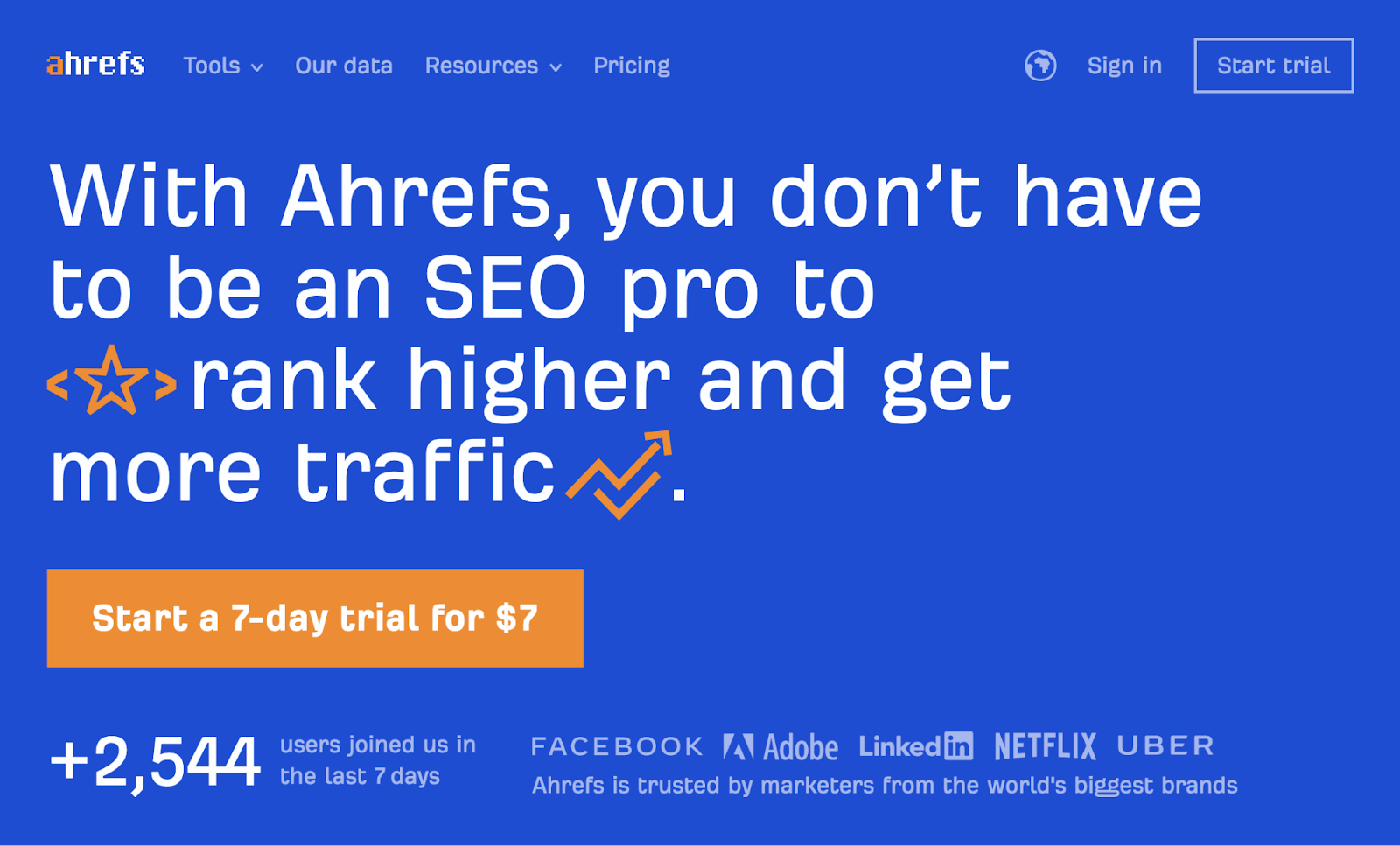

For example, we have versions of our homepage in multiple languages:

Each of these variations uses hreflang to tell search engines about their language and geographic targeting.

There are two main reasons why hreflang is important for SEO:

- It helps combat duplicate content. Let’s say that you have two similar pages. Without hreflang, Google will likely see these pages as duplicates and index only one of them.

- It can help rankings. In this video, Google’s Gary Illyes explains that pages in a hreflang cluster share ranking signals. That means if you have an English page with lots of links, the Spanish version of that page effectively shares those signals. That may help it to rank in Google in other countries.

Implementing hreflang is easy. Just add the appropriate hreflang tags to all versions of the page.

For instance, if you have versions of your homepage in English, Spanish, and German, you would add these hreflang tags to all of those pages:

<link rel="alternate" hreflang="x-default" href="https://yourwebsite.com" />
<link rel="alternate" hreflang="es" href="https://yourwebsite.com/es/" />
<link rel="alternate" hreflang="de" href="https://yourwebsite.com/de/" />
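Because the same set of tags has to appear on every version of the page, it's less error-prone to generate them from a single mapping than to type them out by hand. Here's a minimal sketch of that idea. The `hreflang_tags` helper and the `HOMEPAGE_VERSIONS` mapping are assumptions for illustration.

```python
from html import escape


def hreflang_tags(versions: dict[str, str]) -> list[str]:
    """Build one <link rel="alternate"> tag per language version.

    `versions` maps an hreflang value (e.g. "es" or "x-default") to its URL.
    The same list of tags goes into the <head> of every version.
    """
    return [
        f'<link rel="alternate" hreflang="{escape(lang)}" href="{escape(url)}" />'
        for lang, url in versions.items()
    ]


# Hypothetical mapping for a homepage in English (default), Spanish, and German.
HOMEPAGE_VERSIONS = {
    "x-default": "https://yourwebsite.com",
    "es": "https://yourwebsite.com/es/",
    "de": "https://yourwebsite.com/de/",
}

if __name__ == "__main__":
    print("\n".join(hreflang_tags(HOMEPAGE_VERSIONS)))
```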

Learn more about implementing hreflang and multilingual SEO in the resources below.


Learn more about 301 and 302 redirects in the resources below:


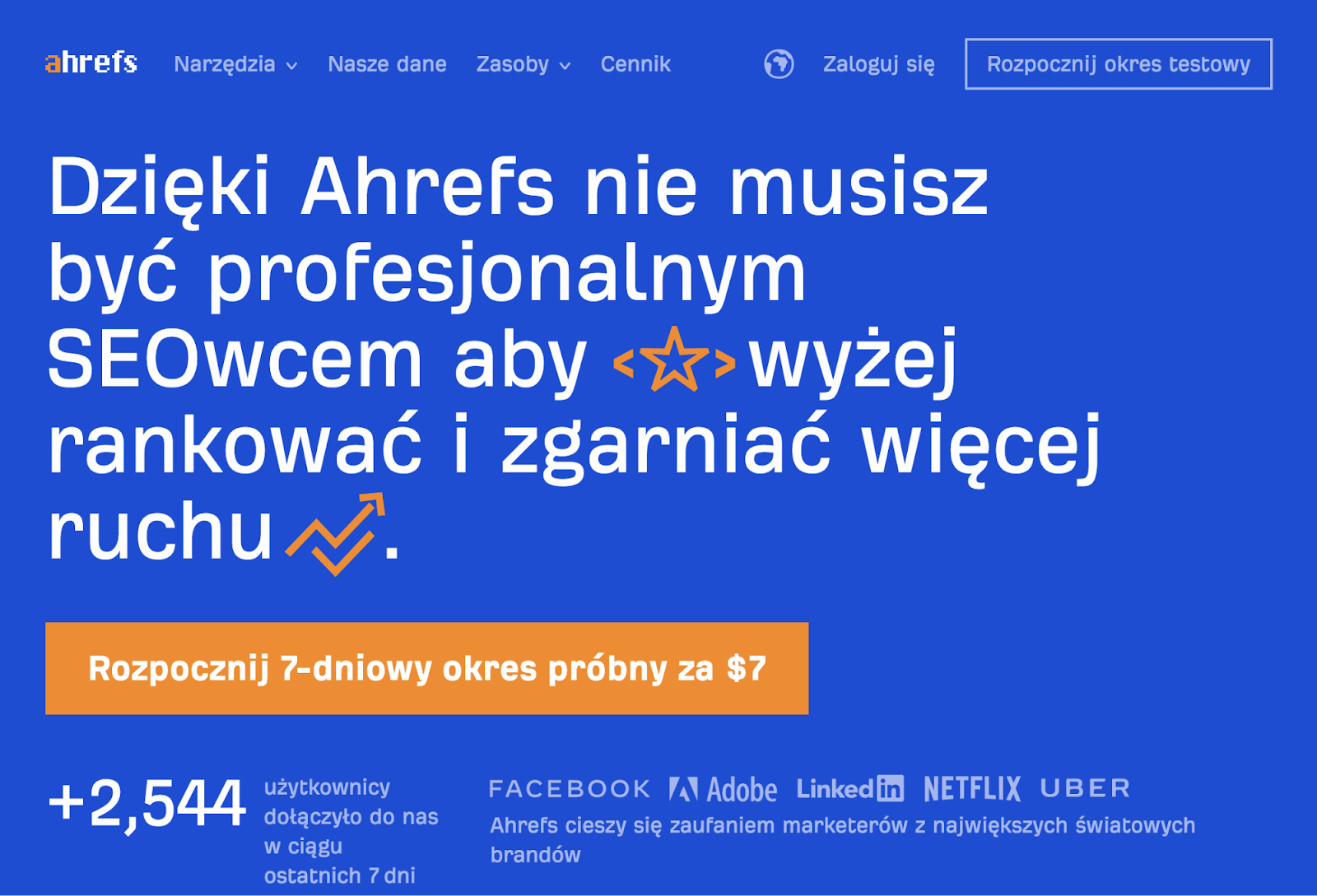

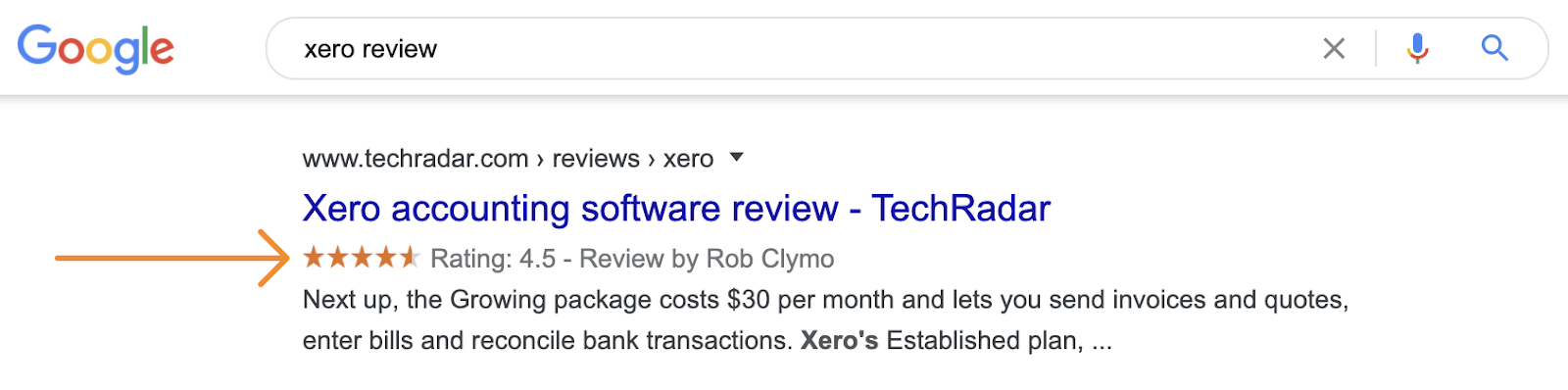

The benefit of rich snippets is more real estate in the search results, and sometimes an improved clickthrough rate.

However, Google only shows rich snippets for certain types of content, and only if you provide them with information using schema markup. If you haven’t heard of schema markup before, it’s additional code that helps search engines to better understand and represent your content in the search results.

For example, if you had a recipe for Kung Pao Chicken on your site, you could add this markup to give Google information about cooking time, calories, and more:

<script type="application/ld+json">
{
  "@context": "https://schema.org/",
  "@type": "Recipe",
  "name": "Kung Pao Chicken",
  "image": [
    "https://yourwebsite.com/kung-pao-chicken.png"
  ],
  "description": "A delicious recipe for Kung Pao Chicken.",
  "prepTime": "PT0M",
  "cookTime": "PT20M",
  "totalTime": "PT20M",
  "nutrition": {
    "@type": "NutritionInformation",
    "calories": "383 cal"
  },
  "aggregateRating": {
    "@type": "AggregateRating",
    "ratingValue": "4.8",
    "ratingCount": "25"
  }
}
</script>

Not only does this provide Google with more information about your page, but it would also make it eligible for rich snippets like this:

Learn more about implementing rich snippet schema in the guides below.


Orphaned pages have no internal links from crawlable pages on your website. As a result, search engines can’t find or index them (unless they have backlinks from other websites).

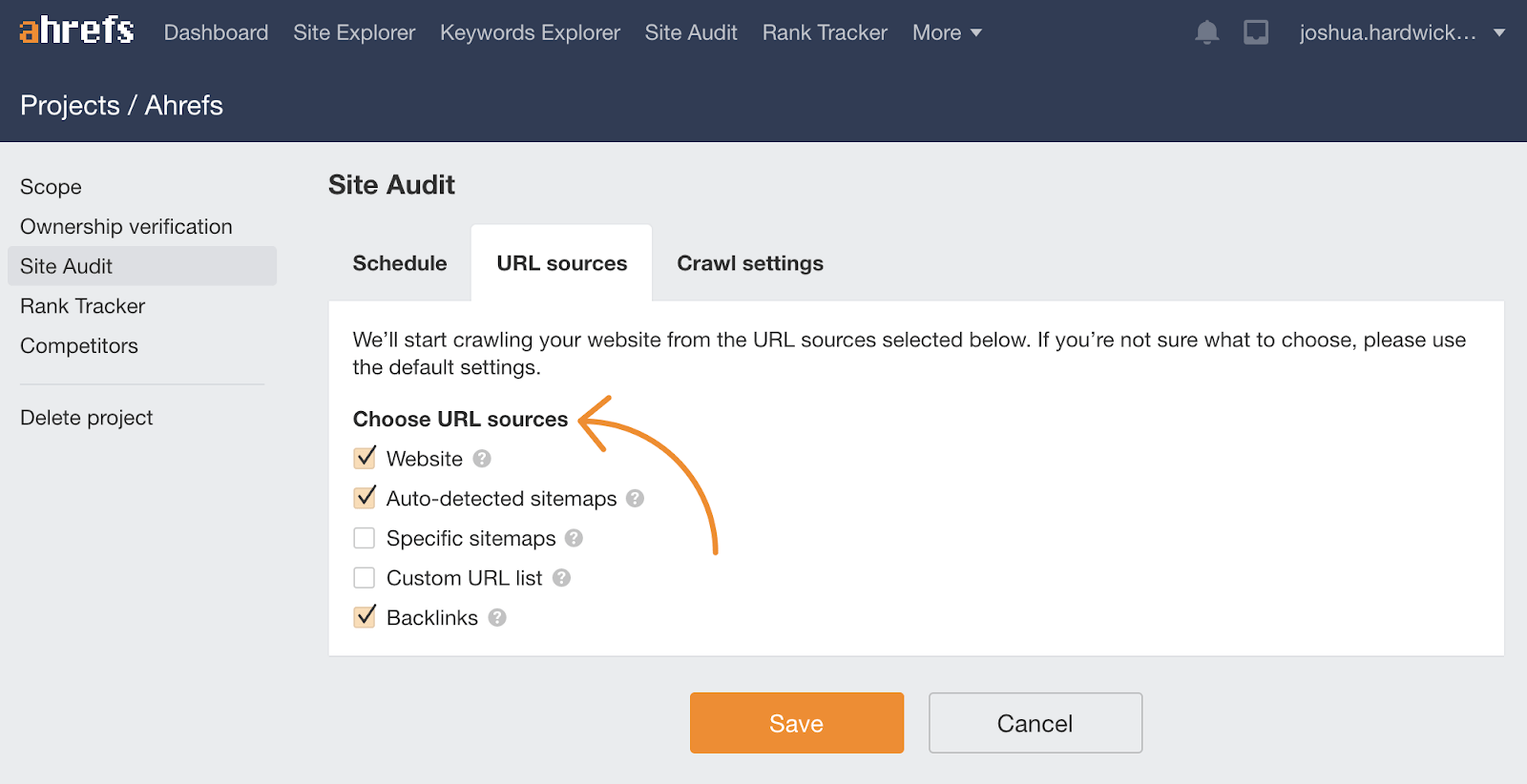

It’s often difficult to find orphaned pages with most auditing tools, as they crawl your site much like search engines do. However, if you’re using a CMS that generates a sitemap for you, you can use this as a source of URLs in Ahrefs’ Site Audit. Just check the option to crawl auto-detected sitemaps and backlinks in the crawl settings.

Sidenote.

If the location of your sitemap isn’t in your robots.txt file, and isn’t accessible at yourwebsite.com/sitemap.xml, then you should check the “Specific sitemaps” option in the crawl settings and paste in your sitemap URL(s).

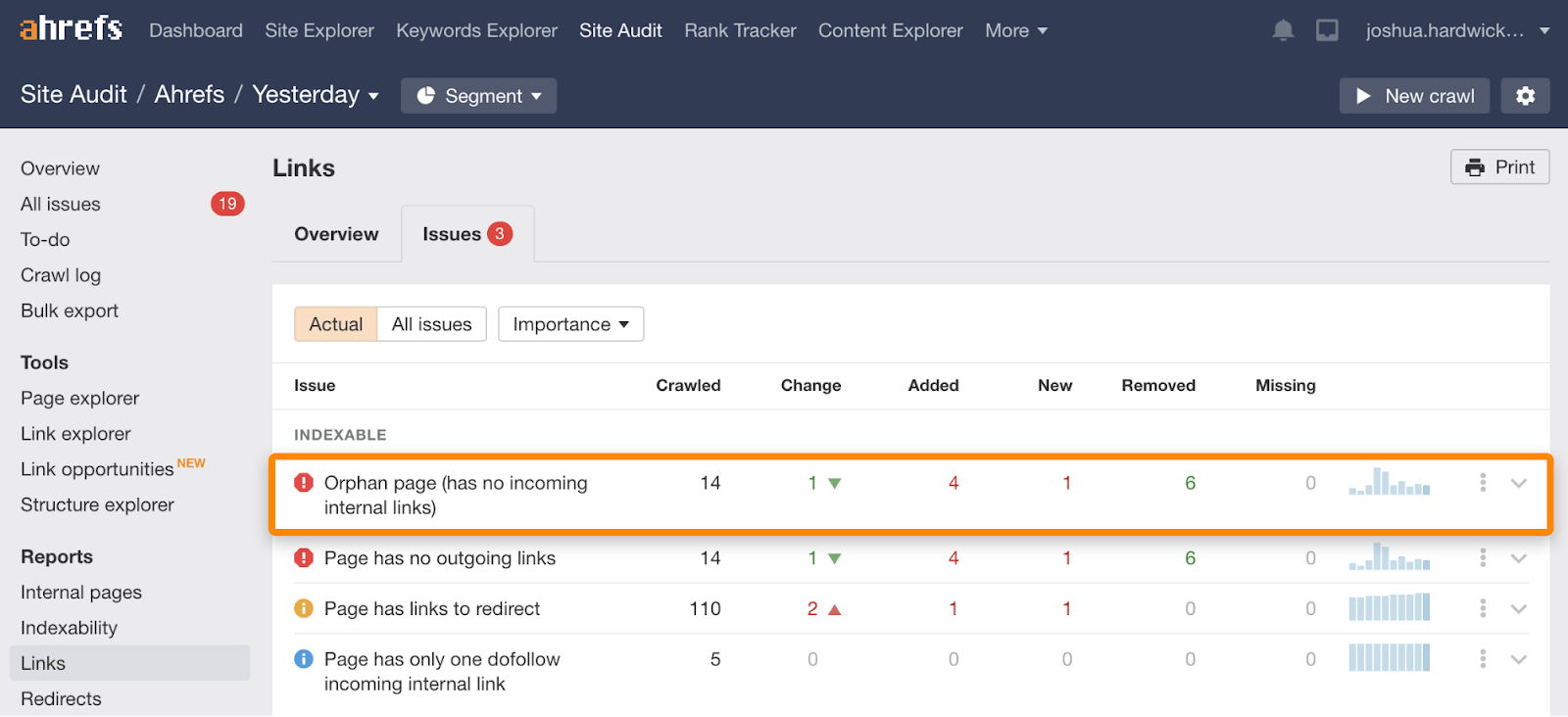

When the crawl is complete, go to the Links report and check for the “Orphan page (has no incoming internal links)” issue.

If any of the URLs are important, you should incorporate them into your site structure. This might mean adding internal links from your navigation bar or other relevant crawlable pages. If they’re not important, then you can delete, redirect, or ignore them. It’s up to you.
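The logic behind this kind of check is simple: take every URL in your sitemap, then subtract the URLs a crawler can reach by following internal links. Here's a rough sketch of that idea, assuming you already have a map of each page's internal links (for example, from a crawl export). The `find_orphans` function and the sample URLs are hypothetical, and reachability from the homepage is used here as a stand-in for "has internal links from crawlable pages."

```python
from collections import deque


def find_orphans(sitemap_urls: list[str], internal_links: dict[str, list[str]], start_url: str) -> list[str]:
    """Return sitemap URLs that can't be reached by following internal links from start_url."""
    reachable = {start_url}
    queue = deque([start_url])
    while queue:  # breadth-first walk over the internal link graph
        page = queue.popleft()
        for target in internal_links.get(page, ()):
            if target not in reachable:
                reachable.add(target)
                queue.append(target)
    return sorted(set(sitemap_urls) - reachable)


# Hypothetical site: /old-landing-page/ is in the sitemap but nothing links to it.
SITEMAP_URLS = [
    "https://yourwebsite.com/",
    "https://yourwebsite.com/blog/",
    "https://yourwebsite.com/blog/post-1/",
    "https://yourwebsite.com/old-landing-page/",
]
INTERNAL_LINKS = {
    "https://yourwebsite.com/": ["https://yourwebsite.com/blog/"],
    "https://yourwebsite.com/blog/": ["https://yourwebsite.com/blog/post-1/", "https://yourwebsite.com/"],
}

if __name__ == "__main__":
    print(find_orphans(SITEMAP_URLS, INTERNAL_LINKS, "https://yourwebsite.com/"))
```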

Recommended reading: Internal Links for SEO: An Actionable Guide

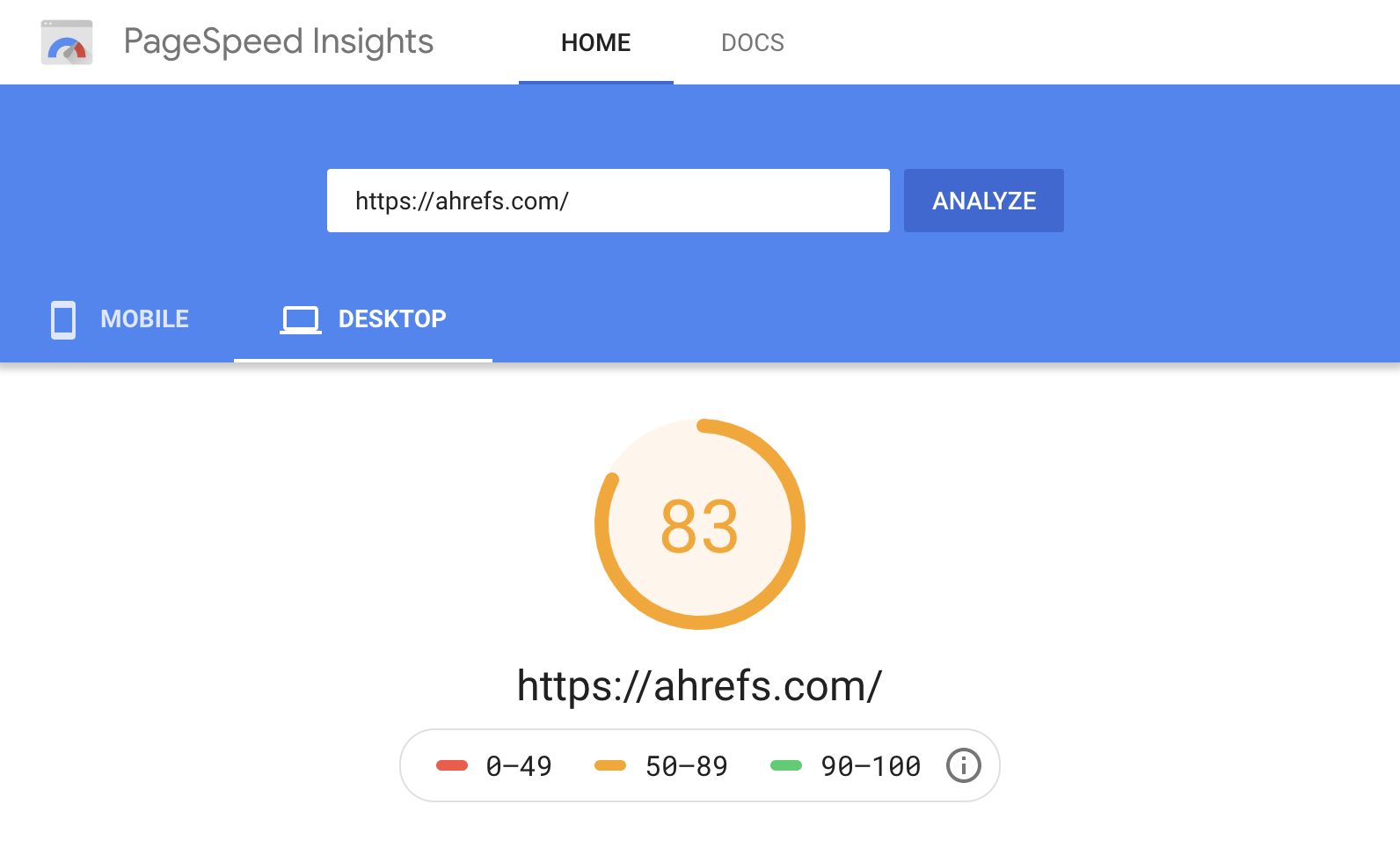

Unfortunately, page speed is a complex topic. There are many tools and metrics you can use to benchmark speeds, but Google’s PageSpeed Insights is a reasonable starting point. It gives you a performance score from 0–100 on desktop and mobile and tells you about the areas that could use some improvement.

But rather than focusing on individual areas, let’s cover a few things that will likely have the most significant positive impact on your page speed for the least effort.

- Switch to a faster DNS provider. Cloudflare is a good (and free) option. Just sign up for a free account, then update your nameservers at your domain registrar.

- Install a caching plugin. Caching stores files temporarily so they can be delivered to visitors faster and more efficiently. If you’re using WordPress, WP Rocket and WP Super Cache are two good options.

- Minify HTML, CSS, and JavaScript files. Minification removes whitespace and comments from code to reduce file sizes. You can do this with WP Rocket or Autoptimize.

- Use a CDN. A content delivery network (CDN) stores copies of your web pages on servers around the globe. It then connects visitors to the nearest server so there’s less distance for the requested files to travel. There are lots of CDN providers out there, but Cloudflare is a good option.

- Compress your images. Images are usually the biggest files on a web page. Compressing them reduces their size and ensures they take as little time to load as possible. There are plenty of image compression plugins out there, but we like ShortPixel.
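To give you a feel for what minification actually does, here's a deliberately naive CSS minifier. It's a sketch for illustration only: the `minify_css` function is hypothetical, it isn't how WP Rocket or Autoptimize work internally, and it doesn't protect whitespace or comment-like text inside quoted strings, so stick with a proper tool in production.

```python
import re


def minify_css(css: str) -> str:
    """Strip comments and unneeded whitespace from a CSS string (naive approach)."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # remove /* comments */
    css = re.sub(r"\s+", " ", css)                        # collapse runs of whitespace
    css = re.sub(r"\s*([{};,])\s*", r"\1", css)           # drop spaces around punctuation
    return css.strip()


SAMPLE_CSS = """/* header styles */
body {
  color: red;
  margin: 0;
}
"""

if __name__ == "__main__":
    minified = minify_css(SAMPLE_CSS)
    print(minified)
    print(f"{len(SAMPLE_CSS)} bytes -> {len(minified)} bytes")
```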

Learn more about improving page speed in the video and linked resources below.

Recommended reading: How to Improve Page Speed from Start to Finish (Advanced Guide)

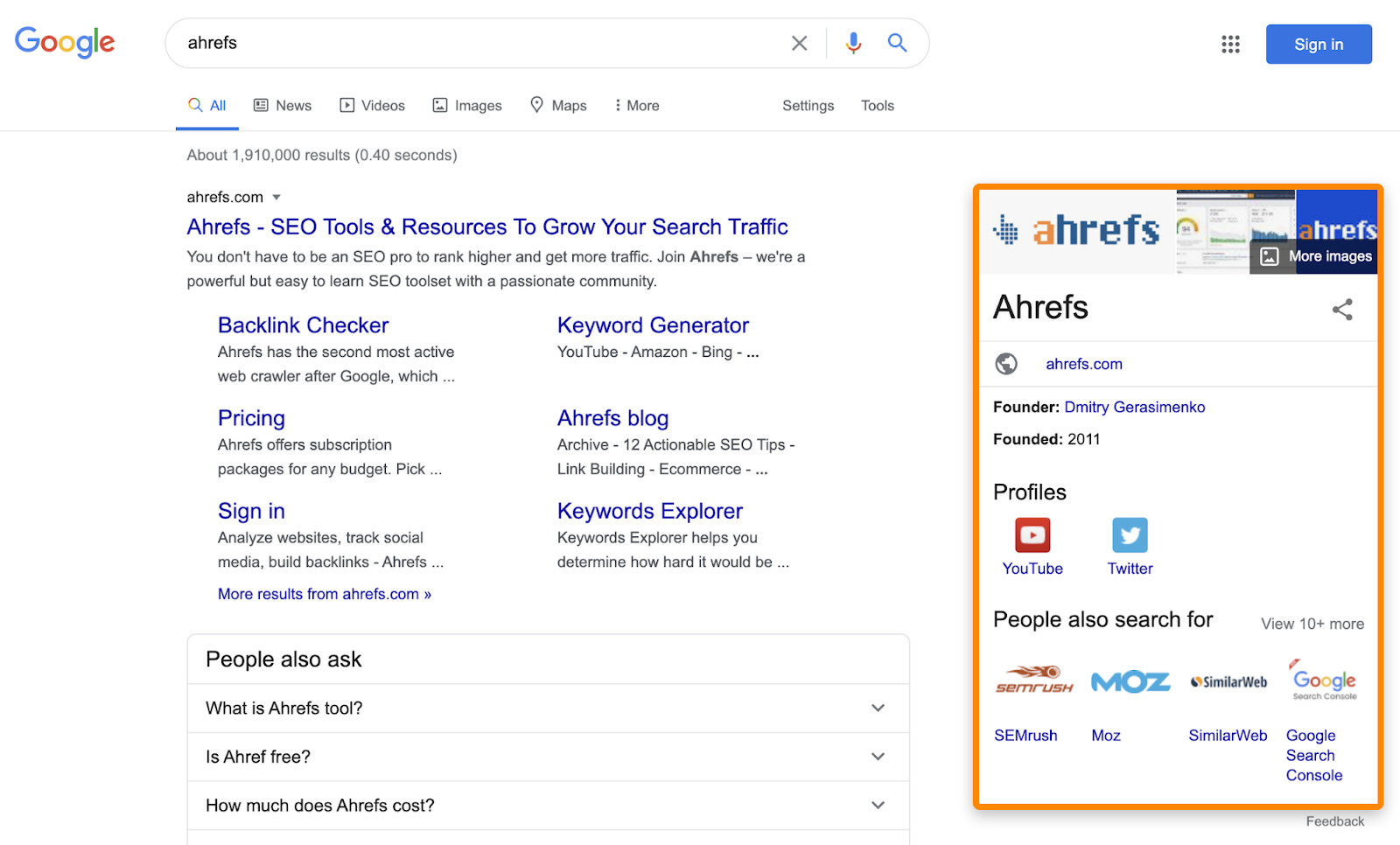

While there’s no definitive process for getting in the Knowledge Graph, using organization markup can help.

You can add this with popular WordPress plugins like Yoast and RankMath, or create and add it manually using a schema markup generator.

Just make sure to:

- Use at least the name, logo, url and sameAs properties

- Include all social profiles as your sameAs reference (and Wikidata and Wikipedia pages where possible)

- Validate the markup using Google’s structured data testing tool.
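Before you run the markup through a validator, you can do a quick local check that the recommended properties are all present. Here's a minimal sketch. The `missing_org_properties` helper is a hypothetical name, and it only checks for the four properties listed above, not whether their values are valid.

```python
import json

REQUIRED_PROPERTIES = ("name", "logo", "url", "sameAs")


def missing_org_properties(json_ld: str) -> list[str]:
    """Return the recommended Organization properties that are absent or empty."""
    data = json.loads(json_ld)  # raises ValueError if the JSON itself is malformed
    if data.get("@type") != "Organization":
        raise ValueError("expected markup with @type Organization")
    return [prop for prop in REQUIRED_PROPERTIES if not data.get(prop)]


if __name__ == "__main__":
    markup = """
    {
      "@context": "https://schema.org",
      "@type": "Organization",
      "name": "Your Company",
      "url": "https://yourwebsite.com"
    }
    """
    print(missing_org_properties(markup))
```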

Here’s the organization markup we use:

<script type="application/ld+json">
{
  "@context": "http://schema.org",
  "@type": "Organization",
  "name": "Ahrefs",
  "description": "Ahrefs is a software company that develops online SEO tools and free educational materials for marketing professionals.",
  "url": "https://ahrefs.com",
  "logo": "https://cdn.ahrefs.com/images/logo/logo_180x80.jpg",
  "email": "support@ahrefs.com",
  "address": {
    "@type": "PostalAddress",
    "addressCountry": "SG",
    "postalCode": "048581",
    "streetAddress": "16 Raffles Quay"
  },
  "founder": {
    "@type": "Person",
    "name": "Dmitry Gerasimenko",
    "gender": "Male",
    "jobTitle": "CEO",
    "image": "https://cdn.ahrefs.com/images/team/dmitry-g.jpg",
    "sameAs": [
      "https://twitter.com/botsbreeder",
      "https://www.linkedin.com/in/dmitrygerasimenko/"
    ]
  },
  "foundingDate": "2010-07-15",
  "sameAs": [
    "https://www.crunchbase.com/organization/ahrefs",
    "https://www.facebook.com/Ahrefs",
    "https://www.linkedin.com/company/ahrefs",
    "https://twitter.com/ahrefs",
    "https://www.youtube.com/channel/UCWquNQV8Y0_defMKnGKrFOQ"
  ],
  "contactPoint": [
    {
      "@type": "ContactPoint",
      "contactType": "customer service",
      "email": "support@ahrefs.com",
      "url": "https://ahrefs.com"
    }
  ]
}
</script>

It doesn’t matter too much which page you add the markup to, but the homepage, contact, or about page is usually your best bet. There’s no need to include it on every page, as Google’s John Mueller confirmed in a 2019 Webmaster Central hangout.

Recommended reading: Google’s Knowledge Graph Explained: How It Influences SEO

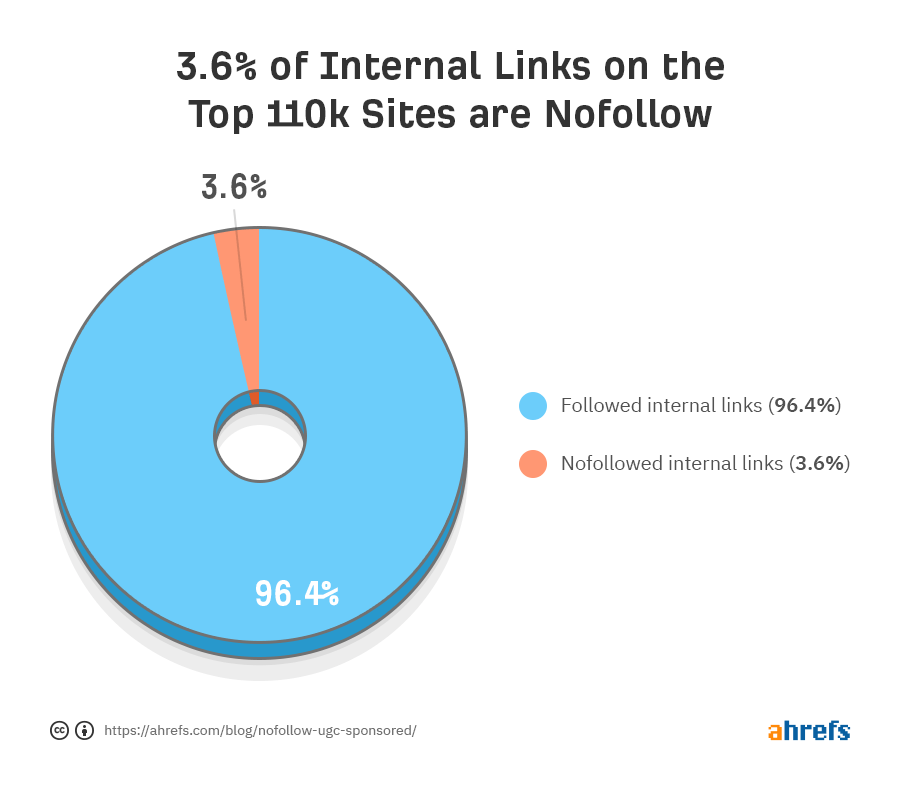

For that reason, they shouldn’t be used for internal links. Yet, according to our study of the top 110,000 websites, 3.6% of internal links are nofollowed.

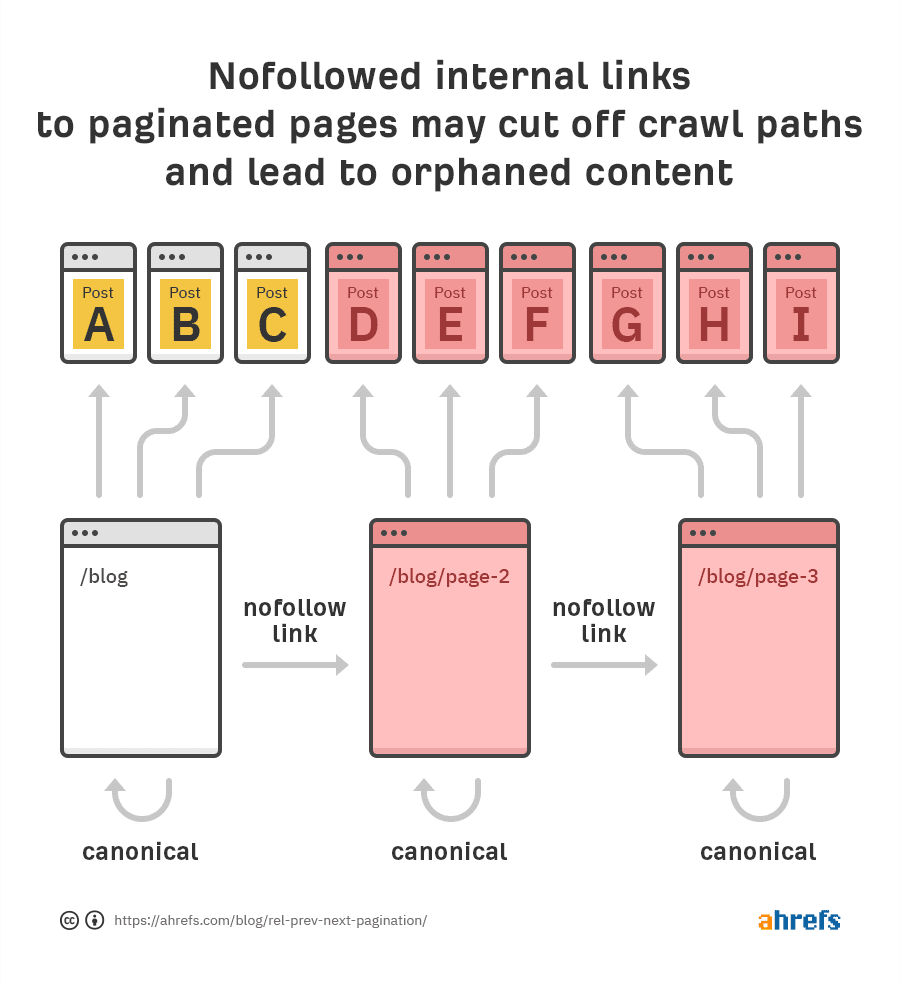

Many website owners do this to try to block indexing of pages, but nofollow doesn’t work like that. Using nofollow on internal links can only do harm, as it could cut off crawling and lead to orphaned content.

This is a common issue when it comes to pagination.

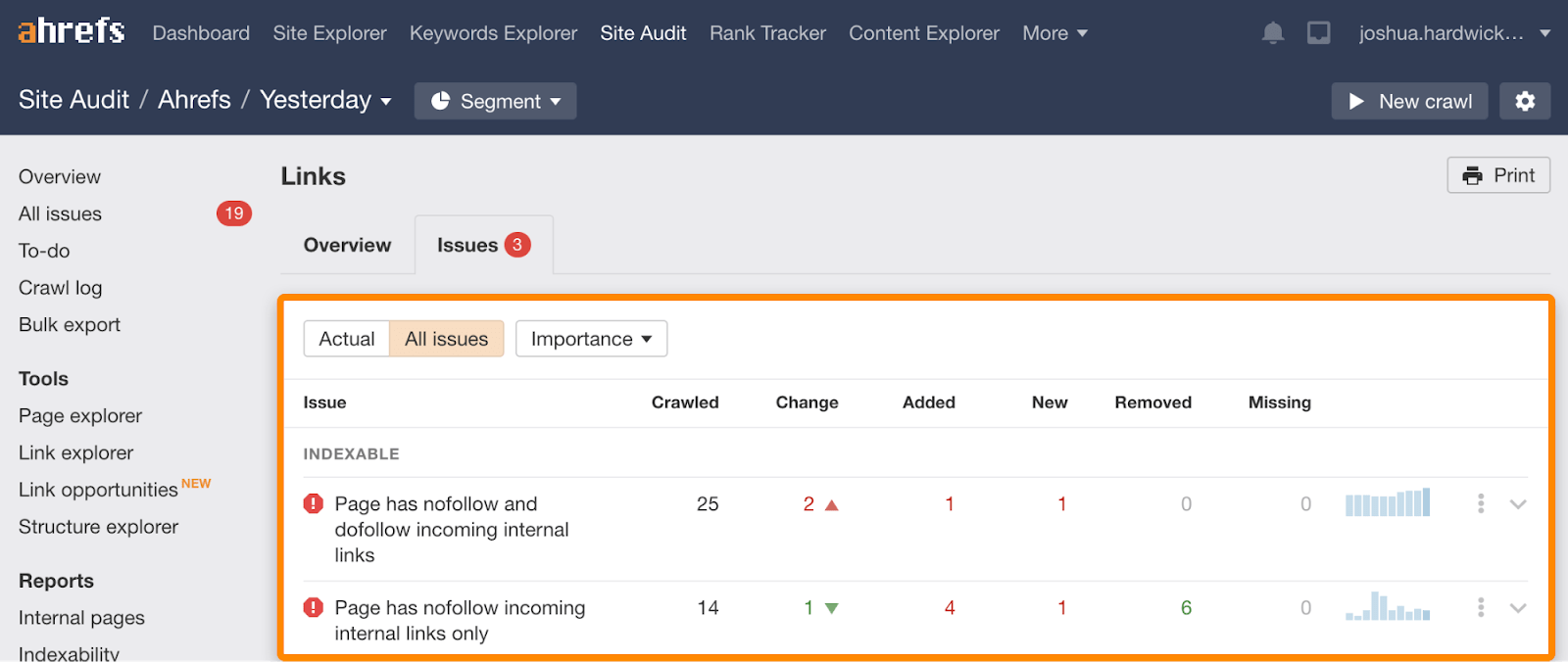

To check your site for nofollowed internal links, run a crawl in Ahrefs Webmaster Tools, then go to the Links report and look for related issues.

Fixing this problem is easy. Just remove the nofollow attribute from the affected links.
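If you'd rather check a single template (like your pagination links) by hand, here's a small sketch that lists the nofollowed internal links on one page. The `nofollowed_internal_links` helper and the sample URLs are hypothetical, and "internal" is simplified here to mean "same hostname as the page."

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class _NofollowParser(HTMLParser):
    """Collects <a rel="nofollow"> links that point to the page's own host."""

    def __init__(self, page_url: str):
        super().__init__()
        self.page_url = page_url
        self.host = urlparse(page_url).netloc
        self.found = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr = dict(attrs)
        href = attr.get("href")
        rels = (attr.get("rel") or "").lower().split()
        if not href or "nofollow" not in rels:
            return
        target = urljoin(self.page_url, href)  # resolve relative links
        if urlparse(target).netloc == self.host:
            self.found.append(target)


def nofollowed_internal_links(page_url: str, html: str) -> list[str]:
    """Return absolute URLs of internal links on the page that carry rel="nofollow"."""
    parser = _NofollowParser(page_url)
    parser.feed(html)
    return parser.found


if __name__ == "__main__":
    sample = '<a href="/blog/page/2/" rel="nofollow">Next</a> <a href="/about/">About</a>'
    print(nofollowed_internal_links("https://yourwebsite.com/blog/", sample))
```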


Final thoughts

Technical SEO is a complex business, and there are many more best practices that we didn’t have time to cover in this post. However, the advice above should be enough to nip the most common technical mishaps in the bud and easily put your website’s performance in the top 10% compared to the rest of the web.

Got questions or suggestions? Ping me on Twitter.

Similar Posts

How to Optimize Your Linux Kernel with Custom Parameters

Linux stands at the heart of countless operating systems, driving everything from personal computers to servers and embedded systems across the globe. Its flexibility and open-source nature allow for extensive customization, much of which is achieved through the adept manipulation of kernel parameters. These boot options are not just tools for the Linux connoisseur but…

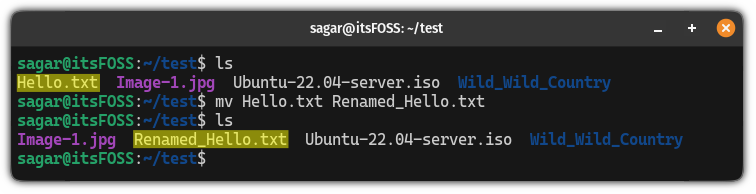

mv Command Examples

A simple and useful command in Linux to rename and move files. Learn more about it here.

How To Exclude a Schema From a PostgreSQL Database

The post How To Exclude a Schema While Restoring a PostgreSQL Database first appeared on Tecmint: Linux Howtos, Tutorials & Guides .

Sometimes when restoring a multi-schema database from a backup file, you may want to exclude one or more schemas, for one reason or the other.

The post How To Exclude a Schema While Restoring a PostgreSQL Database first appeared on Tecmint: Linux Howtos, Tutorials & Guides.

Top 13 Tiling Window Managers for Linux in 2023

The post 13 Best Tiling Window Managers for Linux in 2023 first appeared on Tecmint: Linux Howtos, Tutorials & Guides .

As the name suggests, Linux Window Managers are responsible for coordinating how application windows work. They run quietly in the background of your operating system,

The post 13 Best Tiling Window Managers for Linux in 2023 first appeared on Tecmint: Linux Howtos, Tutorials & Guides.

10 Best Download Managers for Linux in 2023

The post 10 Most Popular Download Managers for Linux in 2023 first appeared on Tecmint: Linux Howtos, Tutorials & Guides .

Download managers on Windows are one of the most needed tools that are missed by every newcomer to the Linux world, programs like Internet Download

The post 10 Most Popular Download Managers for Linux in 2023 first appeared on Tecmint: Linux Howtos, Tutorials & Guides.

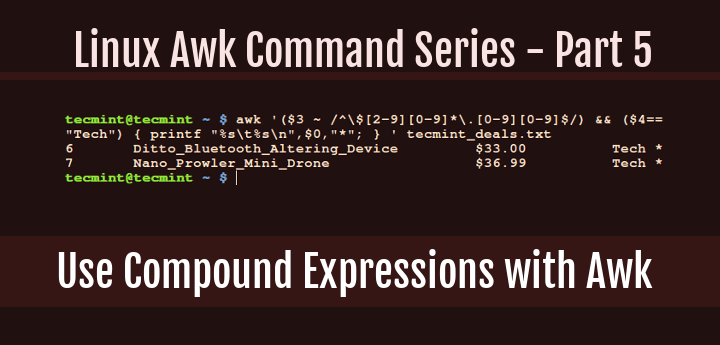

How to Use Compound Expressions with Awk in Linux – Part 5

The post How to Use Compound Expressions with Awk in Linux – Part 5 first appeared on Tecmint: Linux Howtos, Tutorials & Guides .

All along, we have been looking at simple expressions when checking whether a condition has been met or not. What if you want to use

The post How to Use Compound Expressions with Awk in Linux – Part 5 first appeared on Tecmint: Linux Howtos, Tutorials & Guides.