Locating Leviathan Files in Linux

Introduction

In the realm of Linux, where the command line is often the compass by which we navigate, the efficient management of disk space is crucial. Whether you’re sailing through personal projects or steering the ship of enterprise servers, large and forgotten files can be like hidden icebergs, threatening to sink your system’s performance. This article serves as a detailed chart to help you uncover these lurking data giants. By mastering a few essential tools and commands, you’ll be able to not only find large files but also make informed decisions about how to handle them.

Understanding File Sizes and Disk Usage in Linux

Before embarking on our voyage to track down large files, it’s essential to have a clear understanding of file size units. Linux measures file sizes in bytes, and tools such as du -h, df -h, and ls -h scale them in powers of 1024: 1024 bytes to a kilobyte (strictly a kibibyte, KiB), 1024 KB to a megabyte (MiB), and so on up to terabytes (TiB) and beyond. Drive manufacturers, by contrast, usually count in powers of 1000. The du (disk usage) command is an invaluable tool on this journey, reporting the space that files and directories actually occupy on disk. Similarly, df (disk free) reports the total, used, and available space of each mounted filesystem, giving a bird’s-eye view of our storage landscape.
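To see the two unit conventions side by side, the following sketch uses numfmt from GNU coreutils. It assumes a typical glibc-based distribution, because BusyBox and macOS do not ship the same tool. The helper names to_iec and to_si are hypothetical, and /var/log is only an example directory.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: convert a raw byte count into human-readable units.
# IEC (powers of 1024) is the convention du -h, df -h and ls -h follow.
to_iec() { numfmt --to=iec "$1"; }
# SI (powers of 1000) is what drive manufacturers usually advertise.
to_si() { numfmt --to=si "$1"; }

to_iec 1048576    # 1024 * 1024 bytes: prints 1.0M
to_iec 1000000    # a decimal megabyte: prints 977K
to_si 1000000     # same count in SI units: prints 1.0M

# Bird's-eye view: size, used and available space of the filesystem holding /.
df -h /

# Total space one directory tree occupies on disk (-s: summary only).
du -sh /var/log 2>/dev/null
```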

The find Command: Searching for Large Files

The find command in Linux is a powerful utility for seeking out files that meet specific criteria. To home in on large files, we can combine it with the -size test:

find / -type f -size +100M

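This command line incantation lists every regular file larger than 100 megabytes (MiB) anywhere under the root directory. You can adjust the criteria to match a range of sizes, or run an action on each match: appending -exec rm {} \; deletes every file found (the backslash stops the shell from swallowing the semicolon). Because that removes every match without asking, review the list first, or use -ok rm {} \; instead, which prompts before each deletion.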
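Two refinements are worth knowing. First, find rounds each file’s size up to whole units of the suffix before comparing, so -size -1G matches only empty files; to express a range, keep both bounds in the same unit, as in -size +100M -size -1024M. Second, -xdev keeps find on one filesystem, which skips /proc and other mounts, and 2>/dev/null hides permission errors. The sketch below wraps both ideas in two hypothetical helper functions, find_large and find_in_range_mib, built only from standard find options.

```shell
#!/usr/bin/env bash
# find_large DIR SIZE (hypothetical helper): list regular files under DIR
# larger than SIZE, where SIZE uses find's suffixes such as 500M or 2G.
find_large() {
    local dir=$1 size=$2
    # -xdev: stay on DIR's filesystem (skips /proc, /sys and other mounts).
    # 2>/dev/null: hide "Permission denied" noise when not running as root.
    find "$dir" -xdev -type f -size "+$size" 2>/dev/null
}

# find_in_range_mib DIR LOW HIGH (hypothetical helper): files whose size,
# rounded up to whole MiB, lies strictly between LOW and HIGH.
# Both bounds use the same unit because find rounds sizes up per unit.
find_in_range_mib() {
    local dir=$1 low=$2 high=$3
    find "$dir" -xdev -type f -size "+${low}M" -size "-${high}M" 2>/dev/null
}
```

For example, find_large / 100M repeats the search above without wandering into other filesystems, and find_in_range_mib / 100 1024 lists files between roughly 100 MiB and 1 GiB. To see sizes as well as names, append -exec ls -lh {} + to the find command; the + form hands many paths to a single ls call.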

The du Command: Assessing File and Directory Sizes

While find is excellent for pinpointing files, du dives deeper, allowing us to understand the sizes of directories as well:

du -h --max-depth=1 /var | sort -hr | head -10

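This pipeline reports the size of /var and of each directory directly inside it (du -h --max-depth=1), sorts the human-readable sizes in descending order (sort -hr), and keeps the top 10 lines (head -10), the first of which is the total for /var itself. This is incredibly useful for uncovering directories that have grown unexpectedly bulky.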
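The pipeline is easy to wrap in a reusable function. The sketch below assumes GNU du and sort (for --max-depth and -h). The name top_dirs is hypothetical, and the -x flag (--one-file-system) is an addition that keeps du from descending into other mounted filesystems.

```shell
#!/usr/bin/env bash
# top_dirs DIR [N] (hypothetical helper): the N largest lines of a one-level
# du report for DIR, largest first; DIR's own total is always the top line.
top_dirs() {
    local dir=$1 n=${2:-10}
    # -x: stay on one filesystem; --max-depth=1: one level of subdirectories.
    # sort -hr understands human-readable sizes, so 2.0G ranks above 900M.
    du -xh --max-depth=1 "$dir" 2>/dev/null | sort -hr | head -n "$n"
}
```

For instance, top_dirs /var 10 reproduces the command above; running it again on the largest subdirectory drills down one level at a time.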

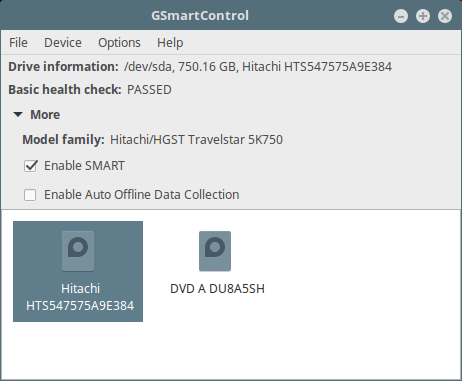

Graphical Tools for Finding Large Files

For those who prefer a visual approach, Linux doesn’t disappoint. Tools like Baobab (GNOME’s Disk Usage Analyzer) and Filelight (for KDE) provide a graphical interface for analyzing disk usage. They are often more intuitive for beginners and present disk space consumption as an interactive chart of the directory tree. Installation is typically a breeze through the system’s package manager, and usage is as simple as launching the application and selecting a drive or folder to scan.

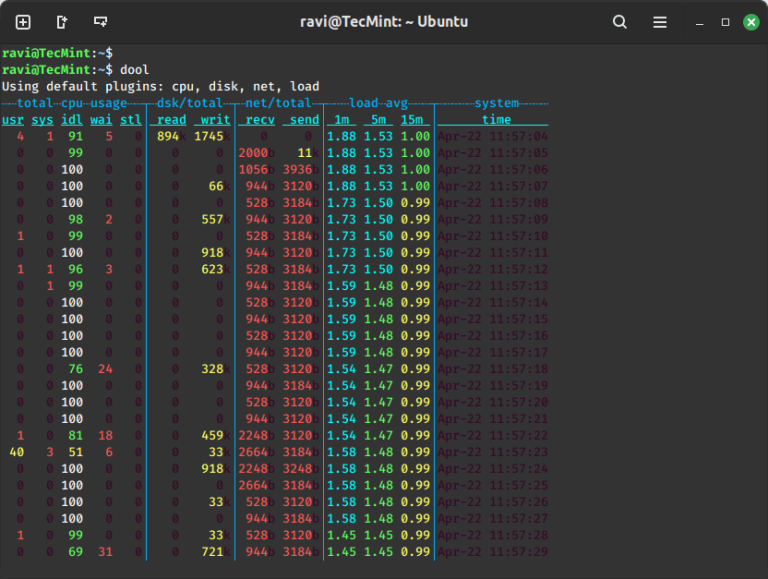

Advanced Methods: ncdu and ls Command Tricks

Beyond the basics lies ncdu (NCurses Disk Usage), a simple yet robust disk usage analyzer with a text-based ncurses interface for navigating the directory structure. Once it is installed via the package manager, launch it with ncdu /; it scans the tree and then lets you browse directory sizes interactively, largest first.
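ncdu can also separate scanning from browsing, which helps on busy servers: scan once, save the results, and explore them later. The sketch assumes ncdu’s documented -x (stay on one filesystem), -0 (no progress display), -o (export to a file) and -f (import a saved file) options; the function names and file paths are hypothetical.

```shell
#!/usr/bin/env bash
# scan_to_file DIR OUTFILE (hypothetical helper): scan DIR with ncdu and
# save the results instead of opening the interactive browser.
scan_to_file() {
    local dir=$1 out=$2
    # -x: do not cross filesystem boundaries; -0: no progress output;
    # -o: write the scan results to OUTFILE.
    ncdu -x -0 -o "$out" "$dir"
}

# browse_scan FILE (hypothetical helper): open a previously saved scan
# interactively; nothing on disk is rescanned.
browse_scan() {
    ncdu -f "$1"
}
```

For example, scan_to_file / /tmp/root-scan.ncdu could run during a quiet period, and browse_scan /tmp/root-scan.ncdu opens the saved results whenever convenient.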

Additionally, the ls command can be coaxed into listing files by size with:

ls -lShr

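Here, -l lists files in long format, -S sorts by file size (largest first), -h gives human-readable sizes, and -r reverses the order, so the largest files appear last, just above the prompt. Note that ls only examines the current directory; it does not search subdirectories.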
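Because ls examines only one directory, ranking files across a whole tree needs another approach. One option, not among the tools covered above, is GNU find’s -printf action combined with sort and numfmt. The helper name largest_files is hypothetical.

```shell
#!/usr/bin/env bash
# largest_files DIR [N] (hypothetical helper): the N biggest regular files
# anywhere under DIR, largest first, with human-readable sizes.
largest_files() {
    local dir=$1 n=${2:-10}
    # %s = size in bytes, %p = path; tab-separated so paths with spaces survive.
    find "$dir" -xdev -type f -printf '%s\t%p\n' 2>/dev/null |
        sort -t $'\t' -k1,1nr |               # numeric sort on the byte count
        head -n "$n" |
        numfmt -d $'\t' --field=1 --to=iec    # 5242880 becomes 5.0M
}
```

Running largest_files /home 20, for example, gives the twenty biggest files under /home regardless of how deeply they are nested.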

Managing Large Files: Best Practices

Upon locating the titans of data, one must decide whether to compress, move, or delete them. It’s good practice to back up files before taking any action, especially if their purpose isn’t documented. Files can be removed with the rm command, but always make sure first that no system process or application still needs them. Bear in mind that deleting a file a process still holds open does not free its space until that process closes it; lsof +L1 lists such deleted-but-open files.
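As one illustration of a cautious workflow, the sketch below compresses a file in place only if no process currently has it open. It assumes gzip is installed and uses fuser (from the psmisc package) when it is available; the function name compress_if_idle is hypothetical, and a real cleanup should still start from a backup.

```shell
#!/usr/bin/env bash
# compress_if_idle FILE (hypothetical helper): gzip FILE unless a running
# process has it open. fuser -s exits 0 when some process is using FILE.
compress_if_idle() {
    local file=$1
    if command -v fuser >/dev/null 2>&1 && fuser -s "$file" 2>/dev/null; then
        echo "skipping $file: still in use" >&2
        return 1
    fi
    # gzip writes FILE.gz and removes FILE only after compression succeeds.
    gzip -- "$file"
}
```

For example, compress_if_idle /var/log/myapp/old.log (a hypothetical path) leaves old.log.gz behind, which zcat can still read later.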

Automating the Hunt: Scripting for Regular Monitoring

To keep a constant watch for large files, one can write simple bash scripts that utilize the find or du commands and schedule them with cron jobs to run at regular intervals. The script can output its findings to a log file or even email a report to the system administrator.
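A minimal sketch of such a script follows, assuming GNU find (for -printf) and numfmt. The script name large-file-report.sh, its arguments, and the paths in the crontab comment are all hypothetical. The report goes to standard output, so cron’s redirection decides where the log lives; piping it to mail -s "Large files" root instead would email it, provided the host has a working mail setup.

```shell
#!/usr/bin/env bash
# large-file-report.sh ROOT SIZE (hypothetical script): print a timestamped
# list of regular files under ROOT larger than SIZE (e.g. 500M), biggest first.
#
# Example crontab entry (hypothetical paths), every Sunday at 03:00:
# 0 3 * * 0 /usr/local/bin/large-file-report.sh / 500M >> /var/log/large-files.log 2>&1

report_large_files() {
    local root=$1 size=$2
    echo "== $(date '+%F %T') files over $size under $root =="
    find "$root" -xdev -type f -size "+$size" -printf '%s\t%p\n' 2>/dev/null |
        sort -t $'\t' -k1,1nr |
        numfmt -d $'\t' --field=1 --to=iec
}

# Run only when executed directly with both arguments, not when sourced.
if [[ "${BASH_SOURCE[0]}" == "$0" && $# -eq 2 ]]; then
    report_large_files "$1" "$2"
fi
```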

Conclusion

With the tools and techniques outlined in this guide, you are now well-equipped to embark on a quest for large files within the Linux file system. Integrating these practices into your regular system maintenance will help ensure smooth sailing and prevent the potential chaos caused by unchecked data growth. As you become more familiar with these methods, you will no doubt discover additional tricks to keep your system efficient and responsive.